By 2026, AI will no longer be a boardroom experiment or a shiny pilot project; it will be expected to deliver measurable business outcomes. Yet across enterprises, a familiar pattern persists: promising AI proofs of concept that never reach production. The root cause is rarely model accuracy or lack of ambition. It is an architectural mismatch.

Organizations either overbuild, deploying complex AI agent orchestration where simple automation would suffice, or underbuild, forcing legacy rule-based tools into workflows that demand reasoning, context, and judgment. Both paths lead to rising costs, fragile systems, and technical debt that compounds over time.

The real differentiator between AI programs that scale and those that stagnate is not the choice of model but the choice of AI Agent Architecture. Selecting the right architectural tier for each problem determines resilience, ROI, and long-term maintainability. This blog presents a practical, field-tested decision framework for moving from AI experimentation to AI production with confidence. At Kellton, as an AI-first digital transformation partner, we use this framework to help our clients make that move from “AI experimentation” to “AI production” with maximum ROI and minimum technical debt.

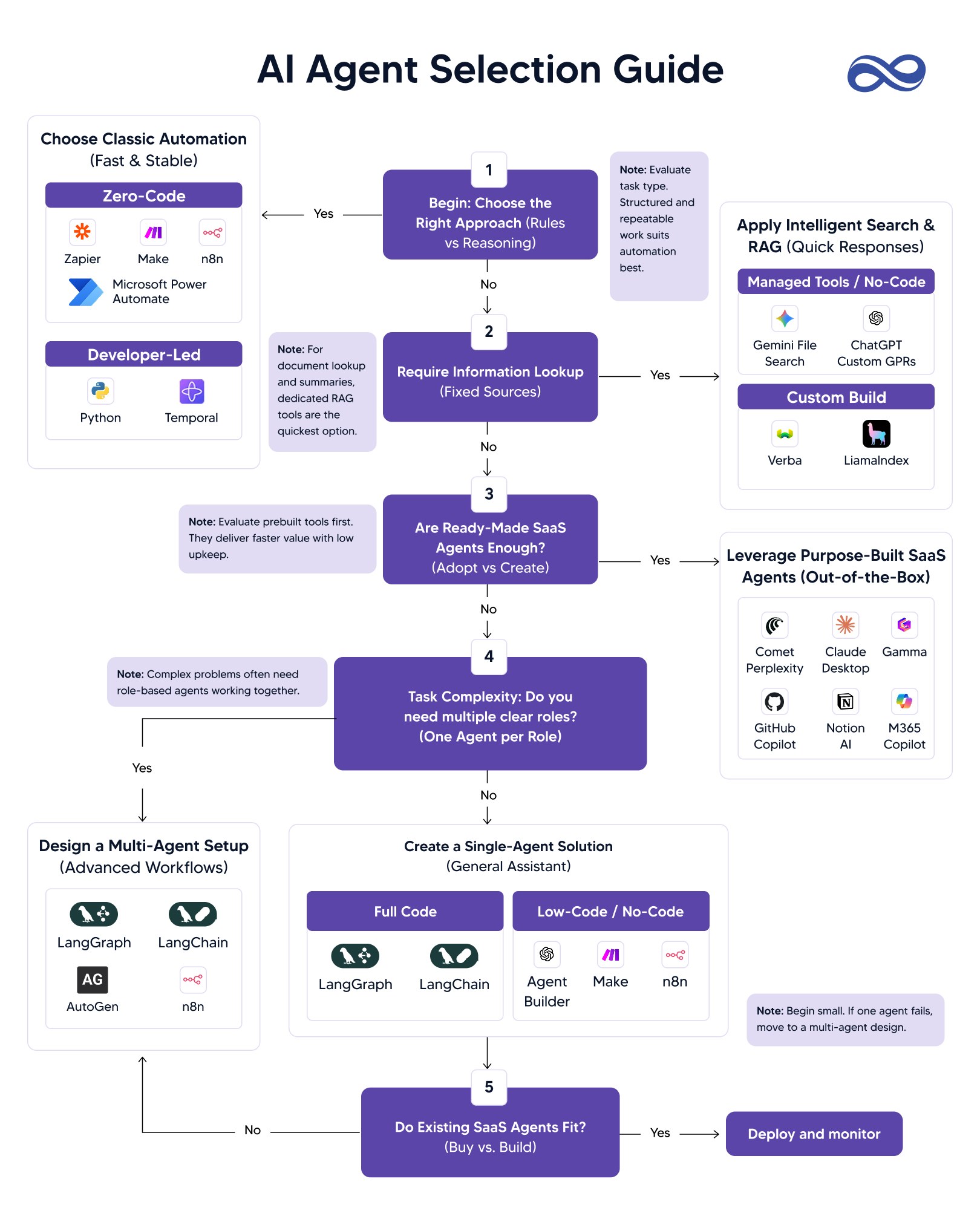

Quick steps for choosing AI Agent Architecture

1. The “Rules vs. Reasoning” litmus test

The first question isn’t “Which model should we use?” but “Does this task actually require intelligence?” In my experience, 60-70% of workflows are deterministic—think invoice routing or data syncing. Here, Classic Automation reigns supreme.

If a process is structured, repeatable, and follows strict “if-this-then-that” logic, tools like Zapier, Make, or Temporal workflows written in Python offer fully predictable behavior at lower compute cost. Zapier handles 50 billion tasks a year with zero hallucination risk, and Temporal provides fault-tolerant orchestration at pennies per execution.

Tip: Don’t waste “tokens” on logic that can be solved with “code.” Use LLMs for the messy, unstructured gaps in your workflow, not the stable bridges. I've advised Fortune 500 companies to hybridize: code the core and apply AI at the edges, cutting costs by 40% while improving reliability. Consider a procurement workflow: Classic Automation approves routine POs and escalates only anomalies to reasoning agents. This prevents AI Agent Architecture bloat from day one.
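The procurement example can be sketched in a few lines of Python. This is a minimal illustration, not a production design. The `PurchaseOrder` fields, the `APPROVED_VENDORS` allow-list, and the `AUTO_APPROVE_LIMIT` threshold are hypothetical. `escalate_to_agent` is a placeholder where an LLM-backed reviewer would plug in. The point is the shape of the workflow: deterministic rules settle every case they can, and only the leftovers consume tokens.

```python
from dataclasses import dataclass

@dataclass
class PurchaseOrder:
    # Hypothetical fields; a real ERP record would carry far more.
    po_id: str
    vendor: str
    amount: float
    has_contract: bool

APPROVED_VENDORS = {"acme", "globex"}  # assumption: a maintained allow-list
AUTO_APPROVE_LIMIT = 10_000.0          # assumption: a policy threshold

def route_po(po: PurchaseOrder) -> str:
    """Deterministic core: plain rules decide every PO they can."""
    if po.amount <= 0:
        return "reject"  # malformed input never needs reasoning
    if (po.vendor in APPROVED_VENDORS
            and po.has_contract
            and po.amount <= AUTO_APPROVE_LIMIT):
        return "auto_approve"
    # Anything the rules cannot settle is treated as an anomaly.
    return "escalate"

def escalate_to_agent(po: PurchaseOrder) -> str:
    # Placeholder: a reasoning agent (LLM call) would review the PO here.
    return f"queued {po.po_id} for agent review"

if __name__ == "__main__":
    orders = [
        PurchaseOrder("PO-1", "acme", 2_500.0, True),
        PurchaseOrder("PO-2", "initech", 48_000.0, False),
        PurchaseOrder("PO-3", "globex", -10.0, True),
    ]
    for po in orders:
        decision = route_po(po)
        if decision == "escalate":
            print(escalate_to_agent(po))
        else:
            print(po.po_id, decision)
```

With these sample orders, only PO-2 reaches the reasoning layer. PO-1 is approved and PO-3 is rejected by plain code.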

2. Leveraging RAG for Immediate Velocity

If your primary hurdle is information retrieval—asking questions of your internal wikis, PDFs, or databases—don’t build an “agent” yet. Implement Retrieval-Augmented Generation (RAG).

- Managed Tools: Gemini File Search or ChatGPT Custom GPTs are excellent for rapid internal deployment.

- Custom Builds: For enterprise-grade security and complex data structures, frameworks like LlamaIndex allow for nuanced data indexing that off-the-shelf tools can’t match.
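To make the RAG pattern concrete, here is a dependency-free sketch of its two halves. First, retrieve the most relevant passages; then build a prompt that grounds the model in them. It is a deliberate simplification. Bag-of-words cosine similarity stands in for the embedding-based search that tools like Gemini File Search or LlamaIndex perform, the sample documents are invented, and the actual model call is left out.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a real system would use embeddings instead."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[tuple[str, float]]:
    """Retrieval step: rank documents by similarity to the query, keep top k."""
    q = Counter(tokenize(query))
    scored = [(doc_id, cosine(q, Counter(tokenize(text)))) for doc_id, text in docs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

def build_grounded_prompt(query: str, docs: dict[str, str], k: int = 2) -> str:
    """Augmentation step: put retrieved passages in front of the question."""
    context = "\n".join(f"[{doc_id}] {docs[doc_id]}" for doc_id, _ in retrieve(query, docs, k))
    return (
        "Answer using only the context below and cite the [id] you used.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )

# Invented sample "wiki" entries for illustration only.
SAMPLE_DOCS = {
    "hr-leave": "Employees accrue 1.5 days of paid leave per month.",
    "it-vpn": "Connect to the VPN before accessing internal dashboards.",
    "fin-expense": "Submit expense reports within 30 days of purchase.",
}

if __name__ == "__main__":
    # The generation step (sending this prompt to an LLM) is omitted.
    print(build_grounded_prompt("How many days of paid leave do employees accrue?", SAMPLE_DOCS))
```

In practice, the managed and custom options above replace `tokenize` and `cosine` with embeddings and a vector index. The prompt-assembly step stays conceptually similar.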

3. The “Adopt vs. Create” strategy

Before writing a single line of code, assess the maturity of the SaaS AI ecosystem. The “buy” side has evolved significantly and often delivers a total cost of ownership 3–5× lower than custom builds once ongoing maintenance, security patching, model updates, and scaling costs are factored in.

In productivity workflows, tools like Microsoft 365 Copilot now automate a substantial share of everyday office tasks, while Notion AI converts unstructured notes into actionable insights. For engineering teams, GitHub Copilot has demonstrated measurable gains in developer velocity, with internal studies showing productivity improvements of over 50%.

In research-heavy workflows, solutions such as Perplexity serve as always-on intelligence layers, accelerating discovery without the overhead of building and maintaining custom agents.
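The TCO comparison only holds when recurring costs are counted alongside the upfront build, and a small calculator makes that explicit. The line items mirror the ones named in this section: maintenance, security patching, model updates, and scaling. Every dollar figure is a placeholder for illustration, not a benchmark. Real estimates should come from your own vendor quotes and engineering costs.

```python
from dataclasses import dataclass, field

@dataclass
class CostProfile:
    upfront: float
    # Recurring yearly line items, e.g. maintenance or licenses.
    annual: dict[str, float] = field(default_factory=dict)

    def tco(self, years: int) -> float:
        """Upfront cost plus all recurring costs over the horizon."""
        return self.upfront + years * sum(self.annual.values())

# Placeholder figures only; substitute your own estimates.
build = CostProfile(
    upfront=400_000,
    annual={"maintenance": 80_000, "security_patching": 20_000,
            "model_updates": 40_000, "scaling": 40_000},
)
buy = CostProfile(
    upfront=20_000,
    annual={"licenses": 80_000, "integration_upkeep": 10_000},
)

if __name__ == "__main__":
    years = 3
    ratio = build.tco(years) / buy.tco(years)
    print(f"build: {build.tco(years):,.0f}  buy: {buy.tco(years):,.0f}  ratio: {ratio:.1f}x")
```

With these placeholder inputs, the custom build costs roughly 3.2 times as much over three years. Changing the horizon or the recurring line items moves that ratio, which is why upfront cost alone is a misleading comparison.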

4. Single-Agent vs. Multi-Agent orchestration

When SaaS solutions and simple AI enhancements reach their limits, the next decision is the cognitive complexity of the system’s “brain.” This determines how much reasoning, coordination, and autonomy the AI must handle.

Single-agent architectures are best suited for general-purpose assistance and well-bounded tasks. A single agent interprets the input, reasons over it, and produces an output in one continuous flow. These are easier to design, test, and govern, and can be implemented quickly using no-code tools such as OpenAI’s Agent Builder or full-code frameworks like LangChain when greater customization is required.
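A single agent's “one continuous flow” reduces to a short sequence: interpret the request, decide whether a tool is needed, act, and respond. The sketch below shows that flow in plain Python. It is not the API of Agent Builder or LangChain. `plan` is a hypothetical keyword rule standing in for the model's reasoning step so the example runs offline, and `lookup_order_status` is a hypothetical tool.

```python
import re
from typing import Callable, Dict, Optional, Tuple

def lookup_order_status(order_id: str) -> str:
    # Hypothetical tool; a real one would query an order system.
    return f"Order {order_id} has shipped."

TOOLS: Dict[str, Callable[[str], str]] = {"order_status": lookup_order_status}

def plan(user_input: str) -> Optional[Tuple[str, str]]:
    """Stand-in for the LLM reasoning step: pick a tool and its argument."""
    if "order" in user_input.lower():
        match = re.search(r"\b\d+\b", user_input)
        if match:
            return "order_status", match.group()
    return None

def single_agent(user_input: str) -> str:
    step = plan(user_input)           # interpret and reason
    if step is not None:
        tool_name, arg = step
        return TOOLS[tool_name](arg)  # act with a single tool call
    # No tool applies: respond directly.
    return "I can help with order status; please include an order number."

if __name__ == "__main__":
    print(single_agent("Where is order 12345?"))
```

Because there is one decision point and one path through the code, this kind of agent is straightforward to test and govern. That is the advantage single-agent designs trade against flexibility.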

Multi-agent architectures represent the next level of sophistication and are appropriate for complex, multi-step business processes. In these systems, different agents specialize in distinct roles—for example, one agent gathers and validates data, another generates content or recommendations, and a third enforces policy or compliance checks. Orchestration frameworks such as LangGraph or AutoGen enable these agents to coordinate, share context, pass state, and even review or correct each other’s outputs.
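The role split described here (gather and validate, generate, enforce policy) can be sketched as three functions sharing one state object. An orchestrator loops until the compliance agent approves or a round limit is reached. This is plain Python meant to show the coordination pattern; it is not LangGraph or AutoGen code. Each “agent” is a deterministic stub where an LLM call would normally sit, and `POLICY_LIMIT` and the request fields are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional

POLICY_LIMIT = 50_000  # hypothetical compliance threshold

@dataclass
class SharedState:
    request: dict
    data: Optional[dict] = None
    draft: Optional[dict] = None
    issues: list[str] = field(default_factory=list)
    approved: bool = False
    log: list[str] = field(default_factory=list)

def data_agent(state: SharedState) -> None:
    """Gathers and validates inputs (stub: copies required fields)."""
    missing = [k for k in ("supplier", "amount") if k not in state.request]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    state.data = {k: state.request[k] for k in ("supplier", "amount")}
    state.log.append("data_agent: input validated")

def drafting_agent(state: SharedState) -> None:
    """Proposes a recommendation, revising it if the reviewer raised issues."""
    supplier, amount = state.data["supplier"], state.data["amount"]
    approve = min(amount, POLICY_LIMIT) if state.issues else amount
    state.draft = {"supplier": supplier, "approve": approve,
                   "manual_review": amount - approve}
    state.log.append(f"drafting_agent: proposed {state.draft}")

def compliance_agent(state: SharedState) -> None:
    """Reviews the draft against policy and records any issues."""
    state.issues = []
    if state.draft["approve"] > POLICY_LIMIT:
        state.issues.append("approval exceeds policy limit")
    state.approved = not state.issues
    state.log.append(f"compliance_agent: approved={state.approved}")

def orchestrate(request: dict, max_rounds: int = 3) -> SharedState:
    """Runs the agents in order; stays unapproved if no draft passes review."""
    state = SharedState(request=request)
    data_agent(state)
    for _ in range(max_rounds):
        drafting_agent(state)
        compliance_agent(state)
        if state.approved:
            break
    return state

if __name__ == "__main__":
    for line in orchestrate({"supplier": "acme", "amount": 80_000}).log:
        print(line)
```

For an 80,000 request, the first draft is rejected and the revised draft approves 50,000, routing the remaining 30,000 to manual review. Even in this toy form, the shared state, ordering, and retry logic show the added design and operational complexity.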

While multi-agent systems can unlock powerful automation, they also introduce additional design and operational complexity. As a result, they should be adopted only when the business value clearly justifies the added overhead.

AI Agent Architecture Decision Tree: Your Path from Automation to Orchestration

Choosing the right AI agent architecture does not require starting with the most advanced system. It requires progressing through the right levels of intelligence, based on the nature of the task and the value it delivers. This decision tree visually maps how enterprise workflows evolve—from deterministic automation to intelligent, orchestrated agent systems—without unnecessary complexity or technical debt.

The AI Agent Architecture decision tree makes one principle clear: progression should be deliberate, not aspirational. The goal is not architectural sophistication, but resilience at every layer.

Start at the base of the tree by solving deterministic needs with classic automation, and use RAG to ground AI responses in enterprise data and eliminate knowledge friction. Move up only as required, automating peripheral and rule-driven workflows with no-code or low-code tools. Reserve the top of the tree, multi-agent orchestration, for core business processes where coordination, reasoning, and measurable ROI justify the added complexity.

By scaling intelligence in layers, organizations avoid premature over-engineering while preserving a clear, governed path from automation to orchestration.
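The decision tree can also be expressed as a short function. It checks each tier in the order this article walks through them and stops at the simplest tier that fits. The `Workflow` flags and the sample workflows other than invoice routing are a hypothetical simplification. Real assessments weigh these questions with far more nuance than five booleans.

```python
from dataclasses import dataclass

@dataclass
class Workflow:
    deterministic: bool           # strict if-this-then-that logic?
    retrieval_only: bool          # mainly questions over wikis, PDFs, databases?
    saas_fit: bool                # does a mature SaaS AI tool already cover it?
    distinct_roles: bool          # needs specialized steps that must coordinate?
    roi_justifies_overhead: bool  # does business value cover orchestration cost?

def choose_tier(w: Workflow) -> str:
    """Returns the least complex architecture tier that fits the workflow."""
    if w.deterministic:
        return "classic automation"
    if w.retrieval_only:
        return "RAG"
    if w.saas_fit:
        return "adopt SaaS AI"
    if w.distinct_roles and w.roi_justifies_overhead:
        return "multi-agent orchestration"
    # Default for reasoning tasks that don't earn orchestration overhead.
    return "single agent"

if __name__ == "__main__":
    examples = {
        "invoice routing": Workflow(True, False, False, False, False),
        "policy Q&A (hypothetical)": Workflow(False, True, False, False, False),
        "claims processing (hypothetical)": Workflow(False, False, False, True, True),
    }
    for name, wf in examples.items():
        print(f"{name}: {choose_tier(wf)}")
```

Note the fallback: a workflow with distinct roles but weak ROI lands on a single agent rather than multi-agent orchestration. This matches the principle that progression should be deliberate, not aspirational.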

Conclusion: Let Kellton architect your AI success

Is your organization struggling to decide between custom-built AI and SaaS-led AI? The proof is in production.

At Kellton, we’ve helped 50+ enterprises move past the hype by auditing real workflows and mapping them to the right AI Agent Architecture tier from day one. As an AI-first digital transformation partner, we don’t start with tools; we start with fit. We identify where rules are enough, where reasoning is required, where RAG unlocks immediate value, where SaaS delivers lower TCO, and where agent orchestration truly earns its place.

The result is not theoretical. Our framework consistently enables 3× faster go-live, up to 40% cost optimization, and measurable ROI from the first deployment, without accumulating long-term technical debt. If you’re done with AI hype and ready for systems that actually scale, let’s talk.

Kellton can dissect your workflows, simulate the AI Agent Architecture decision tree, and fast-track you to a production-ready blueprint that carries your AI investments confidently through 2026 and beyond.