Other recent blogs

Let's talk

Reach out, we'd love to hear from you!

Modern-age enterprises are becoming data-native. They are collecting information from millions of touchpoints across customers, operations, and devices to convert that extracted data into measurable business outcomes. Every department, from marketing to operations, wants data-driven decisions, yet many struggle to scale beyond isolated proof-of-concept models. The reason? A lack of structured, governed, and well-managed data science workflows.

Indeed, this journey requires a disciplined and end-to-end data science workflow. The reason - the absence of a standardized process can cripple the data analytics team with slow experimentation cycles, inconsistent data pipelines, and deployment bottlenecks. The strategic workflow management will not only act as a technical blueprint but also work as a strategic lifecycle to help organizations ensure that every model built in a lab can scale, integrate, and deliver real-world value in production.

From defining the right problem to maintaining deployed models, each stage represents a critical checkpoint in enterprise data maturity. This is why CIOs and data leaders are now rethinking how to operationalize and accelerate the end-to-end data science workflow management lifecycle, which begins as a technical exercise and becomes a repeatable business capability.

In this blog, let’s explore how organizations can manage, accelerate, and optimize their AI-powered data workflows to deliver measurable business outcomes.

What is Data Science Workflow Management?

A data science workflow defines the structured series of steps that transform raw data into deployed, actionable

models — from problem definition to production monitoring. However, as enterprise analytics ecosystems scale, managing this workflow becomes a discipline of its own: data science workflow management.

The workflow management lifecycle typically revolves around the frameworks, tools, and governance practices that analytical teams leverage to orchestrate, monitor, and automate every stage of the data science lifecycle. Additionally, it ensures that projects move systematically from exploration to execution — without bottlenecks, redundancy, or compliance risk.

Data Science workflow brings together three layers of control:

- Process management – Defining repeatable workflows for data ingestion, model training, and deployment.

- Collaboration management – Enabling seamless coordination among data engineers, scientists, and business users.

- Governance management – Ensuring compliance, reproducibility, and traceability of experiments and models.

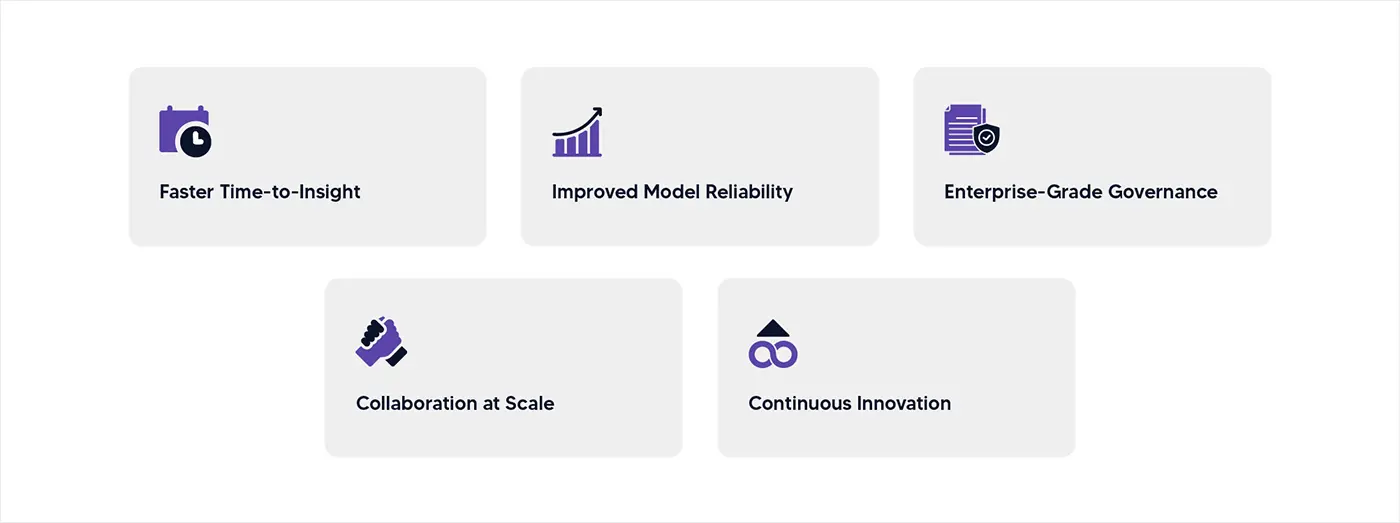

Benefits of Effective Data Science Workflow Management

A well-managed workflow isn’t just about efficiency — it drives business agility, compliance, and scalability.

Faster time-to-insight

Automation of repetitive tasks including data cleaning and model testing reduces cycle times considerably. Teams shift focus from manual processes to strategic analysis, enabling faster response to business questions. This acceleration shortens the gap between data collection and actionable insights, allowing organizations to capitalize on opportunities and address challenges with greater agility and competitive advantage. By eliminating tedious manual interventions, data professionals can dedicate more time to hypothesis generation, exploratory analysis, and creative problem-solving.

Improved model reliability

Centralized version control ensures models remain reproducible, auditable, and explainable which are essential requirements for regulated industries. This systematic approach eliminates ambiguity around model provenance and decision-making logic. Organizations gain confidence in deployment decisions while satisfying regulatory scrutiny. Documentation and tracking mechanisms provide clear accountability, enabling teams to un

derstand model behavior and justify outcomes to stakeholders and auditors.

Enterprise-grade governance

Workflows embed comprehensive data governance principles, ensuring robust security, clear lineage tracking, and compliance with evolving data privacy regulations. This integration transforms governance from afterthought to foundational element. Organizations protect sensitive information while maintaining transparency about data usage. Automated compliance checks reduce risk exposure, while audit trails provide evidence of responsible data stewardship throughout the entire analytical lifecycle.

Collaboration at scale

Cross-functional visibility helps data scientists, analysts, and engineers co-develop solutions without organizational silos hindering progress. Shared platforms and standardized processes enable seamless communication and knowledge transfer. Teams leverage collective expertise more effectively, reducing duplication of effort. This collaborative environment accelerates problem-solving while ensuring diverse perspectives inform solution design, ultimately producing more robust and business-relevant outcomes.

Continuous innovation

Workflow management enables parallel experimentation and continuous improvement without compromising system stability or operational reliability. Teams can explore multiple approaches simultaneously, learning rapidly from successes and failures. This iterative process fosters innovation while maintaining production integrity. Organizations balance exploration with exploitation, ensuring new capabilities emerge steadily while existing systems continue delivering value to stakeholders and customers.

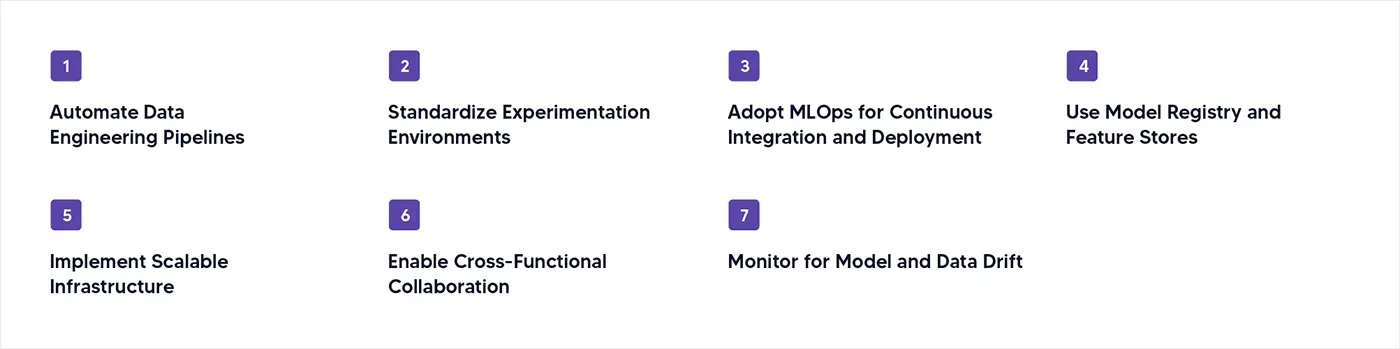

Accelerating end-to-end Data Science Workflows: Best practices for enterprises

The competitive advantage now lies in how fast you can go from data to decision. Accelerating end-to-end data science workflows means minimizing friction across every phase — from ingestion to model deployment — while maintaining accuracy, governance, and scalability. Here are the best practices that CIOs and data science leaders can adopt to accelerate their enterprise workflows:

1. Automate data engineering pipelines

Data preprocessing demands substantial project time and resources. Leveraging ETL automation, data cataloging, and metadata-driven transformations dramatically reduces manual overhead and accelerates delivery timelines. Tools like Airflow, Prefect, and dbt orchestrate these pipelines efficiently, enabling teams to focus on analysis rather than repetitive data preparation tasks that slow innovation.

2. Standardize experimentation environments

Inconsistent environments frequently cause models to fail during deployment, creating costly delays and frustration. Containerization through Docker and infrastructure-as-code approaches using Terraform ensure every model executes identically from laboratory to production. This standardization eliminates environment-related failures, streamlines troubleshooting, and builds confidence in deployment processes across development teams.

3. Adopt MLOps for continuous integration and deployment

Drawing inspiration from DevOps methodologies, MLOps introduces continuous integration and deployment pipelines specifically designed for machine learning models. This approach automates retraining cycles, version control management, and systematic rollout procedures. Frameworks including MLflow, Kubeflow, and SageMaker Pipelines serve as central platforms for accelerating model delivery while maintaining quality standards.

4. Use model registry and feature stores

A centralized model registry maintains comprehensive metadata, lineage tracking, and performance metrics for all organizational models. Feature stores complement this by preserving consistent feature definitions across projects. Together, these repositories eliminate redundant work, ensure enterprise-wide reusability of proven components, and accelerate development by providing teams immediate access to validated resources.

5. Implement scalable infrastructure

Cloud-native architectures and serverless computing capabilities enable resources to scale dynamically based on demand, eliminating infrastructure bottlenecks. Platforms like Databricks and Snowflake unify data storage with computational power, creating seamless end-to-end workflows. This integration accelerates processing, reduces latency, and allows teams to handle varying workloads without manual intervention or capacity planning.

6. Enable cross-functional collaboration

Collaborative workspaces such as JupyterHub and Databricks Notebooks allow engineers and business stakeholders to share experiments, discuss outcomes, and align on metrics within unified environments. Accelerating workflows transcends tools alone—it fundamentally depends on people working synchronously. These platforms break down silos, foster knowledge sharing, and ensure technical solutions address genuine business needs.

7. Monitor for model and data drift

Workflow acceleration without continuous monitoring inevitably leads to quality degradation over time. Integrated monitoring and alerting systems ensure model accuracy remains stable as real-world data patterns evolve. This proactive approach detects performance issues early, triggers necessary interventions, and maintains reliability. When implemented cohesively, these practices create agile data ecosystems where innovation accelerates while remaining governed, reliable, and business-aligned.

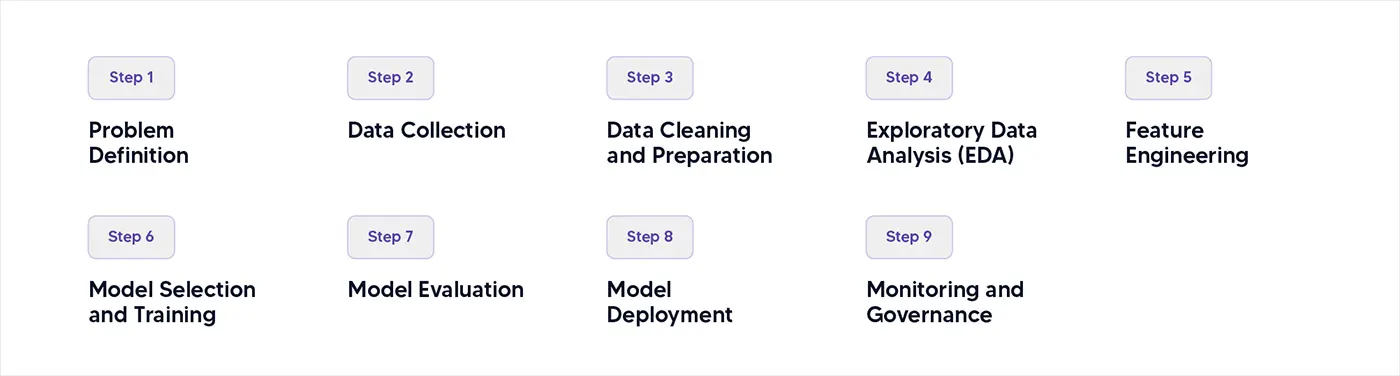

Data Science workflow lifecycle steps: A complete breakdown and how to use

Understanding the data science workflow steps provides a foundation for effective management and acceleration. Each phase represents a crucial checkpoint in converting raw data into operational intelligence.

1. Problem definition

Do you know the core pillar of every successful data science project stands firmly on “Clarity” of business objectives, i.e., what problem are we solving, and why does it matter?

At the executive level, the phase involves turning business objectives into quantifiable analytical goals. Rather than vague requests like “predict customer behavior,” teams can focus on defining measurable problem statements.

For Example: “Forecast 30-day customer churn probability with at least 85% recall accuracy.”

To achieve the outcome, the wise choice would be conducting stakeholder workshops to identify business KPIs and define success metrics that would include ROI impact, cost savings, and accuracy thresholds. Additionally, framing hypotheses that data can test is another way to align business goals with predictive analytics and determine feasibility — whether data exists to support the problem.

Establish a Business Requirements Document (BRD) or Data Science Problem Canvas to align data teams, domain experts, and business stakeholders from day one. This prevents “solution-first” projects that fail to connect with organizational value chains.

2. Data collection

Once the problem is identified and defined, the next challenge in the row comes is collecting the right data, at the right scale, with the right context.

In a typical organizational structure, enterprises source data from:

- Internal systems: ERP, CRM, transaction logs, IoT sensors.

- External sources: Open data repositories, third-party APIs, demographic datasets.

- Streaming data: Event-driven systems, clickstreams, telemetry feeds.

It is crucial to understand that data acquisition is not merely a technical step but is governance-intensive. So, the team must ensure data privacy and compliance, including GDPR, HIPAA, and the DPDP Act in India. Also, an API-driven architecture must be given equal importance for seamless ingestion. Cloud-based data lakes or lakehouses like AWS S3, Databricks, and Snowflake are other crucial elements for scalable storage.

Data teams can adopt ELT (Extract, Load, Transform) pipelines by using tools like Fivetran or Airbyte. The tools help ingest raw data first and then transform it downstream for analytics flexibility.

3. Data cleaning and preparation

It is evident that even the most sophisticated algorithms fail if the data feeded to them is inconsistent. This is why data experts swear by data preprocessing which perfectly converge both data engineering and data science.

The core preprocessing chain includes handling missing values via imputation or interpolation, removal of duplicates, encoding categorical variables, normalizing and scaling numerical features. Also, the process is incomplete without managing data imbalance (e.g., SMOTE techniques for skewed classes).

Data quality directly impacts model reliability and here’s the proof: Gartner estimates that poor data quality costs enterprises up to $12.9 million annually. Hence, enterprises are increasingly mandating automated data validation frameworks and data contracts between teams.

4. Exploratory Data Analysis (EDA)

Exploratory Data Analysis represents far more than visual storytelling—it serves as the critical diagnostic phase of data science. During this stage, analysts and data scientists conduct thorough investigations into the statistical structure, relationships, and anomalies present within datasets.

The primary objectives center around understanding how features are distributed and detecting outliers that might skew analysis, identifying correlations or multicollinearity between variables, testing hypotheses regarding how different variables influence the target outcome, and generating descriptive summaries suitable for executive reporting.

Visualization tools like Tableau, Power BI, and Python libraries including Seaborn and Plotly enable both technical and non-technical teams to grasp patterns intuitively. From a leadership perspective, organizations should encourage reproducible EDA using notebooks such as Jupyter or Databricks that integrate into version control systems like Git. This approach ensures analytical transparency and provides the auditability necessary for compliance reviews.

5. Feature engineering

Feature engineering—the art of transforming raw data into meaningful inputs—often stands as the determining factor between a model's success or failure. This process encompasses several types of transformations, including domain-derived features where analysts create new variables such as customer tenure calculated from join date and current date.

Interaction features combine multiple variables, exemplified by multiplying product price by quantity. Temporal features extract time-based patterns including seasonality or recency, while dimensionality reduction techniques like PCA simplify complex datasets into more manageable forms.

From a strategic perspective, high-performing organizations institutionalize feature stores—centralized repositories where reusable, validated features undergo version control and become shared assets across models. This practice not only accelerates delivery timelines but ensures consistency across business units. Tools powering these feature stores include open-source options like Feast, as well as commercial platforms such as Tecton and Databricks Feature Store.

6. Model selection and training

While modeling often receives glamorization, model selection in practice involves careful trade-offs between interpretability, scalability, and performance. Typical model families span supervised learning approaches including regression, decision trees, ensemble methods, and neural networks. Unsupervised learning encompasses clustering techniques and dimensionality reduction, while advanced architectures include transformer models, graph neural networks, and reinforcement learning.

Key considerations revolve around how business context determines acceptable complexity levels, recognition that regulatory environments may demand explainable models particularly in sectors like financial services, and infrastructure scalability considerations regarding whether models can handle production load. Training practices typically involve splitting data into training, validation, and test sets, with cross-validation ensuring generalization capabilities. Hyperparameter tuning through Grid Search or Bayesian optimization refines model performance further.

7. Model evaluation

Before deployment, models undergo both quantitative and qualitative evaluation to answer one crucial question—does the model meet business success criteria? Common evaluation metrics for classification tasks include precision, recall, F1-score, and ROC-AUC. Regression models are assessed using MAE, RMSE, and R², while business metrics focus on tangible outcomes like uplift in conversion rate, reduction in churn, and cost savings.

Evaluation depth requires using confusion matrices and ROC curves for visibility into model performance, conducting A/B testing for real-world validation, and evaluating fairness and bias using specialized tools. From a governance viewpoint, organizations should establish a Model Risk Management framework that ensures models undergo review for accuracy, bias, and interpretability before receiving approval. This practice has become standard in regulated sectors including banking and healthcare.

8. Model deployment

Deployment transforms data science from theoretical exercise into enterprise capability, representing the stage where models transition from Jupyter notebooks to production pipelines. Deployment strategies vary based on use case requirements. Batch inference involves periodic predictions such as nightly churn scoring, while real-time inference delivers instant predictions through REST APIs or event-driven triggers. Streaming or edge deployment addresses low-latency scenarios encountered in IoT or autonomous systems.

Technologies enabling deployment include Flask, FastAPI, or Django for building model APIs, Docker containers and Kubernetes clusters for scaling operations, and specialized platforms like MLflow, Seldon, or TensorFlow Serving for model versioning and management. From an executive perspective, a well-deployed model integrates seamlessly with existing business systems including CRMs, ERPs, or decision support platforms, delivering actionable intelligence at scale.

9. Monitoring and governance

After deployment, model performance remains dynamic rather than static. Data drift, concept drift, or changing external conditions can gradually degrade accuracy over time. Continuous monitoring encompasses several critical areas including performance drift detection that tracks changes in prediction accuracy, data drift monitoring that compares input feature distributions over time, and latency and uptime tracking to ensure production reliability.

Strategic implementation requires a full MLOps lifecycle featuring automated retraining triggers, CI/CD pipelines, and feedback loops. Platforms such as Kubeflow, MLflow, or AWS SageMaker Pipelines help operationalize these processes effectively. Governance controls should include comprehensive model documentation and explainability logs, approval workflows for redeployment decisions, and role-based access control for both data and models. This creates an ecosystem of Responsible AI that balances innovation with accountability.

How Kellton’s Data Science Services empower workflow management?

Kellton helps enterprises navigate the complexity of modern data ecosystems by providing end-to-end data science services that bring speed, governance, and scalability to their workflows. Our experts design and implement frameworks that standardize workflows across discovery, experimentation, and deployment. This ensures that every initiative, whether AI-driven demand forecasting or predictive maintenance, aligns with your business goals and compliance requirements.

We build MLOps pipelines, establish data governance frameworks, and enable automated retraining systems — so your data science workflow operates like a well-oiled production line, not a fragmented process.

Using cloud-native architectures (AWS, Azure, GCP), modern data stacks (Databricks, Snowflake), and orchestration tools (Airflow, MLflow, Kubeflow), Kellton helps clients accelerate end-to-end data science workflows — from ingestion to inference.

Our agile methodology ensures quick experimentation and scalable deployment while maintaining compliance, version control, and full traceability. Whether you’re modernizing legacy data systems or scaling enterprise AI initiatives, Kellton’s data science services can help you design, automate, and manage workflows that consistently deliver value.

By partnering with Kellton, enterprises can streamline data-to-decision lifecycles, standardize ML model management, ensure faster deployment and ROI realization, along with responsible governance built-in. The result? Data science at enterprise velocity — faster, smarter, and always aligned with business outcomes.