Other recent blogs

Let's talk

Reach out, we'd love to hear from you!

When a multinational healthcare organization discovered that its cloud-stored data remained unmonitored and unclassified, the core team realized they were sitting on a ticking time bomb. Their data lake that was designed to superpower AI-driven diagnostics and health insights had actually turned into a security blind spot where sensitive patient information mingled with operational data. Another grave issue was that everything was accessible through overly permissive credentials that hadn't been reviewed in years.

The core team understands this incident poses a critical security risk with considerable financial exposure. The global average cost of a data breach last year was 4.4M, with 97% organizations spending their resources on AI-related security incidents, preventing the AI shadow proliferation and identifying phishing-resistant authentication breaches. As enterprises race to harness data for competitive advantage, the challenge isn't just collecting information—it's securing it.

At Kellton, we've helped organizations transform their data lake security from reactive checkbox exercises into proactive defense systems that prevent breaches before they happen. This blog explores our approach to data lake security challenges and how we addressed them while enabling innovation.

How did Kellton's data lake security practices prevent a major breach?

A global healthcare analytics firm approached Kellton facing a critical challenge: their AWS-based data lake stored sensitive patient information for population health studies, but their security posture had not evolved with their rapid data growth. The organization processed medical records for over fifteen million patients across multiple countries, supporting research into treatment efficacy and disease prevention. However, their access controls relied on overly broad IAM policies, encryption was inconsistently applied, and audit logging provided insufficient visibility into data access patterns.

The turning point came when their internal audit team discovered that multiple service accounts had excessive permissions, contractor access had not been revoked after engagement completion, and several storage buckets containing protected health information lacked encryption. Recognizing the magnitude of potential HIPAA violations and the reputational damage a breach would cause, leadership engaged Kellton to architect a comprehensive security transformation. Our team conducted a thorough security assessment, identifying twenty-three high-risk vulnerabilities and fourteen compliance gaps that required immediate remediation.

Kellton's approach began with implementing AWS Lake Formation as the security foundation, replacing fragmented IAM policies with centralized, fine-grained access controls. We designed a role-based access model that aligned with the organization's research workflows, ensuring data scientists could access de-identified datasets for analysis while restricting personally identifiable information to authorized personnel. Column-level security masked patient names and contact details from general research queries, while row-level security partitioned data by geographic region to support local compliance requirements.

What role does data lake security play in modern data architecture?

Data security in the modern data-first landscape has evolved from a compliance checkbox to a strategic imperative that determines an organization's ability to innovate and compete. The global average cost per data breach increased 10% over the previous year to reach $4.88 million, marking the biggest jump since the pandemic. Data breaches with lifecycles of more than 200 days cost an average of $5.46 million, demonstrating how detection speed directly impacts financial exposure. The exponential growth of data volumes has fundamentally altered how organizations approach information protection.

Modern enterprises operate in a data-first paradigm where insights drive every business decision, from customer experience optimization to supply chain management. This shift has introduced complex challenges: data sprawl across multi-cloud environments, real-time processing requirements, and the need to balance accessibility with protection. Traditional perimeter-based security models have become obsolete as data moves fluidly between on-premises systems, cloud platforms, edge devices, and third-party applications.

Data lake security serves as the foundational layer in data lake architecture, providing the controls necessary to protect raw, structured, semi-structured, and unstructured data at rest and in motion. Unlike traditional data warehouses that enforce schema and structure upfront, data lakes store information in its native format, creating a flexible repository that supports diverse analytics and machine learning workloads. This architectural flexibility, however, introduces unique security considerations.

What is data lake security and why does it matter?

Security data lake implementations represent a comprehensive approach to protecting enterprise data repositories against unauthorized access, data exfiltration, corruption, and compliance violations. At its core, data lake security encompasses the policies, technologies, and processes designed to safeguard data throughout its lifecycle—from ingestion and storage to processing and consumption—while maintaining the openness and accessibility that makes data lakes valuable for analytics.

Data lake security differs fundamentally from traditional database security due to the nature of the data being protected. Data lakes aggregate information from hundreds or thousands of sources, including IoT sensors, application logs, transaction systems, social media feeds, and external datasets. This heterogeneity means security controls must adapt to varying data classifications, sensitivity levels, and regulatory requirements without manual intervention for each data source.

The security framework addresses several critical dimensions. Identity and access management establishes who can interact with the data lake and under what conditions, implementing role-based access control (RBAC) and attribute-based access control (ABAC) to enforce least-privilege principles. Data encryption protects information both at rest using AES-256 or similar standards and in transit using TLS protocols.

Network security isolates the data lake within virtual private clouds and implements firewall rules to prevent unauthorized network access. Audit logging captures every interaction with the data lake, creating an immutable record for compliance reporting and forensic analysis. Data lake security matters because the consequences of inadequate protection extend far beyond financial penalties. A breach can destroy customer trust, damage brand reputation, trigger regulatory sanctions, and expose intellectual property to competitors.

How do data lake security practices work in enterprise environments?

Data lake security practices operate through a defense-in-depth strategy that layers multiple security controls to create redundancy and resilience. The operational framework begins at the data ingestion layer, where security validation occurs before information enters the lake. Source authentication verifies that data originates from legitimate systems. In contrast, data classification engines automatically tag incoming information based on sensitivity—personally identifiable information, protected health information, payment card data, or trade secrets—enabling downstream security policies to apply appropriate protections.

Once data resides in the lake, encryption serves as the primary protection mechanism. Modern implementations use envelope encryption, where data encryption keys are themselves encrypted by master keys managed through hardware security modules or cloud key management services. This approach ensures that even if storage media is compromised, the data remains unreadable without access to the key hierarchy. Encryption extends to temporary data created during processing, preventing exposure through intermediate storage.

Access control implementation varies based on the data lake platform—AWS Lake Formation, Azure Data Lake Storage, Google Cloud Storage, or open-source solutions like Apache Ranger—but follows common principles. Policies define permissions at multiple granularities: entire databases, specific tables, columns containing sensitive data, or even row-level filters that restrict users to viewing only data relevant to their geographic region or business unit. These policies integrate with enterprise identity providers through protocols like SAML or OAuth, enabling single sign-on and centralized user management.

The operational advantages of robust data lake security practices manifest across several dimensions. Organizations achieve compliance automation, where policies automatically enforce regulatory requirements rather than relying on manual reviews. Data democratization accelerates as teams gain self-service access to datasets they need without creating security risks. Incident response improves dramatically through comprehensive audit trails that enable security teams to quickly identify the scope of a breach, affected data, and compromised accounts. Performance optimization becomes possible as security controls can cache authorization decisions and apply them at scale, reducing the overhead of permission checks on every query.

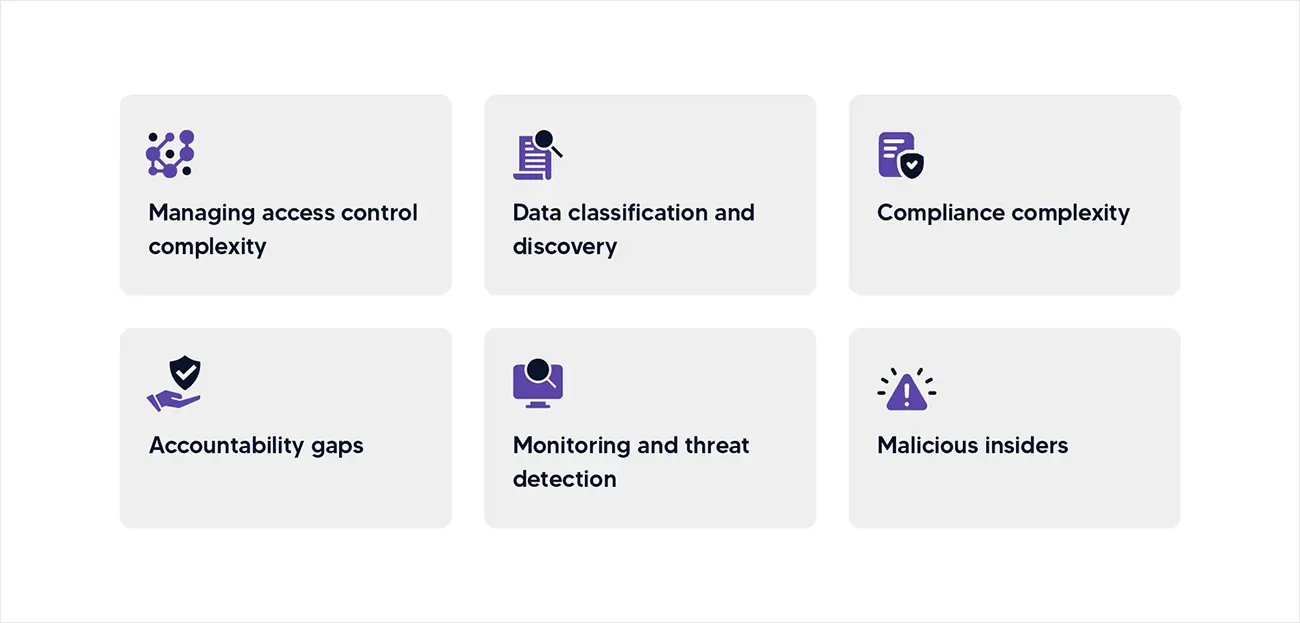

What are the critical security challenges of data lakes?

Data lakes' security challenges stem from their architectural characteristics and operational patterns, which create attack surfaces and vulnerabilities that differ substantially from traditional data management systems. Let’s understand the most pressing challenges for developing effective countermeasures:

Managing access control complexity:

A typical enterprise data lake contains thousands of datasets with varying sensitivity levels, accessed by hundreds or thousands of users and service accounts across multiple departments and partner organizations. As the lake grows, the permission matrix becomes increasingly complex—which users should access which datasets, under what conditions, and with what operations permitted. Without automated policy management, organizations struggle to maintain least-privilege access, often defaulting to overly permissive permissions that expose sensitive data. Permission sprawl occurs as temporary access grants become permanent, former employees retain credentials, and service accounts accumulate unnecessary privileges.

Data classification and discovery:

Unlike structured databases, where schemas explicitly define data types, data lakes contain files in diverse formats—JSON, Parquet, Avro, CSV, images, videos—with sensitive information potentially embedded in any field or document. Organizations must implement automated classification tools that scan petabytes of data to identify regulated information, but these tools face high false-positive rates and struggle with context-dependent sensitivity. A Social Security number in a test dataset poses risks different from one in production customer records, yet automated systems may treat them identically.

Compliance complexity:

Such complexities multiply as organizations operate across jurisdictions with conflicting data residency and sovereignty requirements. GDPR mandates that European citizens data remains in EU regions, CCPA grants California residents deletion rights, and numerous industry-specific regulations impose retention schedules and access restrictions. Data lakes that consolidate global information must implement geographic controls, data lineage tracking to support deletion requests, and retention policies that automatically purge data—all while maintaining operational analytics capabilities.

Accountability gaps:

The shared responsibility model in cloud environments creates accountability gaps. Cloud providers secure the underlying infrastructure, but customers remain responsible for data encryption, access policies, network configurations, and application-layer security. Many organizations underestimate this responsibility, assuming cloud providers guarantee data protection. Configuration errors—publicly accessible storage buckets, overly permissive security groups, disabled encryption—represent the majority of cloud data breaches, often discovered only after exposure.

Monitoring and threat detection:

This is one major problem that companies struggle due the volume and velocity of data lake operations. Security information and event management systems must process millions of log entries daily to detect anomalous access patterns, data exfiltration attempts, or privilege escalation. Distinguishing legitimate analytics workloads from malicious activity requires sophisticated baselines and machine learning models. A data scientist downloading a large dataset for modeling looks remarkably similar to an attacker extracting information, requiring contextual analysis of timing, location, access patterns, and data sensitivity.

Malicious insiders:

Insider threats pose particularly difficult challenges in data lake environments. Privileged users—data engineers, administrators, analysts—require broad access to perform their duties, making it difficult to restrict them without impeding operations. Malicious insiders can gradually accumulate data, exfiltrate information through authorized channels, or plant backdoors for later exploitation. Technical controls must be supplemented with behavioral analytics, data loss prevention tools, and organizational policies around data handling.

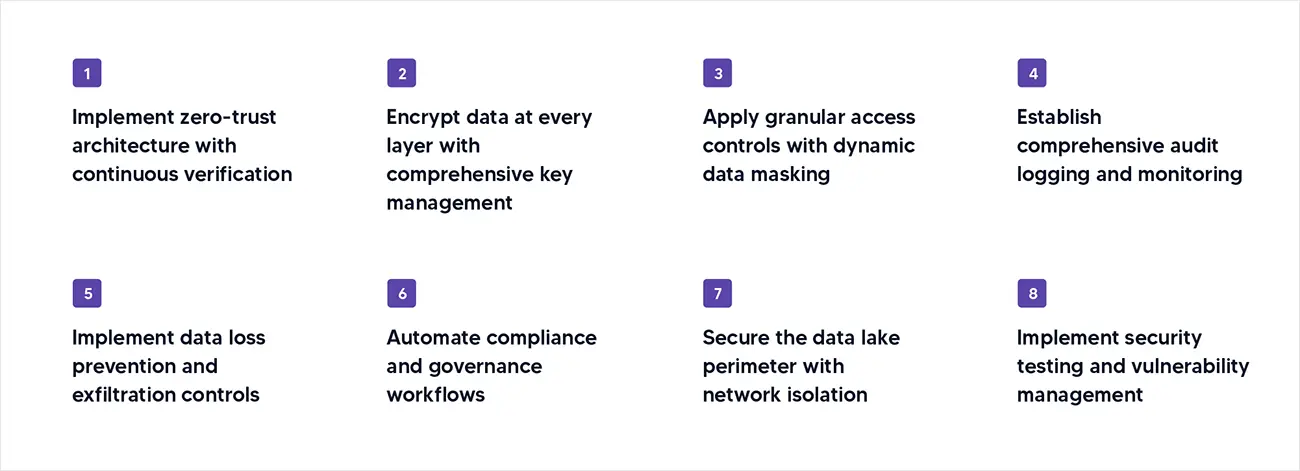

What are the most effective data lake security best practices?

Implement zero-trust architecture with continuous verification

Zero trust security represents a paradigm shift from perimeter-based defenses to a model where no user or system is inherently trusted, regardless of network location. In data lake contexts, zero trust mandates that every access request undergoes authentication, authorization, and continuous validation throughout the session. This approach assumes that breaches are inevitable and focuses on minimizing lateral movement and containing damage when compromise occurs.

Implementation begins with eliminating implicit trust relationships. Service accounts and applications must authenticate using short-lived credentials rather than static passwords or keys. Multi-factor authentication becomes mandatory for human users, preferably using phishing-resistant methods like hardware tokens or biometric verification. Network segmentation isolates the data lake within private subnets, with access controlled through identity-aware proxies that validate requests at the application layer rather than relying solely on network firewalls.

Continuous verification extends beyond initial authentication to monitor ongoing session behavior. Machine learning models establish baseline patterns for each user—typical access times, data volumes, query patterns, geographic locations—and generate alerts when deviations occur.

Encrypt data at every layer with comprehensive key management

Encryption forms the last line of defense when other security controls fail, ensuring that even if attackers gain physical access to storage media or intercept network traffic, the data remains unintelligible. Comprehensive encryption strategies address data at rest, in transit, and in use, with careful attention to key management practices that prevent encryption from becoming a single point of failure.

At-rest encryption protects stored data using strong algorithms like AES-256, applied at multiple levels. Volume-level encryption secures entire storage devices, providing protection against physical theft. Object-level encryption protects individual files within the data lake, enabling fine-grained control where different datasets use different keys based on sensitivity. Client-side encryption goes further by encrypting data before transmission to cloud storage, ensuring the cloud provider never possesses unencrypted information or encryption keys. This approach addresses regulatory requirements around third-party data handling and reduces trust requirements for cloud vendors.

Apply granular access controls with dynamic data masking

Fine-grained access control systems move beyond coarse permissions that grant or deny access to entire databases, instead implementing column-level, row-level, and cell-level restrictions that tailor data visibility to each user's role and clearance. This precision enables organizations to store sensitive data alongside general information while preventing unauthorized exposure.

Column-level security restricts access to specific fields within datasets, and implementation occurs through view definitions, query rewriting, or access control lists at the storage layer that automatically filter restricted columns before returning results. Additionally, Row-level security applies predicates to data queries, returning only rows that match user-specific criteria. Multi-tenant data lakes store information for multiple customers in shared tables, with row-level security ensuring each tenant sees exclusively their own records.

Dynamic data masking takes granular control further by showing sensitive fields in modified form rather than hiding them entirely. Social Security numbers appear as XXX-XX-1234, credit card numbers display only the last four digits, and salaries round to ranges rather than exact values. This approach maintains data utility for analytics while protecting individual privacy.

Establish comprehensive audit logging and monitoring

Audit logging creates an immutable record of every interaction with the data lake, capturing who accessed what data, when, from where, and what operations they performed. This comprehensive visibility serves multiple purposes: compliance reporting, security incident investigation, usage analytics, and performance optimization. Effective audit strategies balance completeness with manageability, capturing sufficient detail for forensic analysis without overwhelming storage and analysis capabilities.

Modern audit implementations capture data at multiple layers. Identity logs track authentication events, including successful logins, failed attempts, credential modifications, and privilege escalations. Access logs record every data retrieval operation, including query text, affected datasets, row counts, and execution times. Administrative logs capture configuration changes, permission modifications, encryption key usage, and policy updates. Network logs provide connection metadata, source IP addresses, geographic locations, and traffic volumes.

Implement data loss prevention and exfiltration controls

Data loss prevention systems monitor and control data movement, preventing unauthorized exfiltration of sensitive information while allowing legitimate business operations. These systems integrate with data lakes through multiple control points, creating defense layers that detect and block exfiltration attempts regardless of method.

Content inspection examines data leaving the lake, scanning for sensitive patterns—credit card numbers, national identifiers, healthcare information, intellectual property markers—using regular expressions, machine learning classifiers, or cryptographic fingerprints of known sensitive documents. When sensitive data is detected in unauthorized contexts, DLP systems can block the transfer, redact the sensitive portions, or generate alerts for security review while allowing transfer to proceed.

Network egress monitoring analyzes traffic patterns to detect large-scale data transfers that might indicate exfiltration. Baseline models establish normal data flow volumes and destinations, flagging deviations like sudden transfers to external cloud storage services, unusual geographic destinations, or high-volume downloads by individual users. Rate limiting can constrain data transfer speeds, making bulk exfiltration prohibitively slow while not impeding normal analytics workflows.

Automate compliance and governance workflows

Compliance automation transforms data lake security from reactive manual processes to proactive policy enforcement that scales with data growth. Automated systems continuously verify that data handling practices align with regulatory requirements, flag deviations before they become violations, and generate documentation for audits without manual compilation.

Policy-as-code frameworks define compliance requirements in machine-readable formats that security systems enforce automatically. A GDPR compliance policy might specify that European citizen data must reside in EU regions, be encrypted with specific algorithms, have access logged, and support deletion within thirty days of request. The system automatically applies these controls to datasets tagged with relevant classifications, ensuring consistent enforcement without requiring administrators to manually configure protections for each dataset.

Data lineage tracking maps information flow from source systems through transformation pipelines to consumption endpoints, documenting processing activities and data location at each stage. This lineage supports compliance requirements around data processing transparency, enables impact analysis for security incidents, and facilitates deletion requests by identifying all locations where specific individual's data appears.

Secure the data lake perimeter with network isolation

Network security establishes foundational controls that prevent unauthorized access to data lake infrastructure, creating isolated environments where data processing occurs behind multiple defensive layers. Proper network architecture reduces attack surface and contains breaches when they occur.

Virtual private cloud deployment places data lake resources in isolated network segments with no direct internet connectivity. Bastion hosts or jump boxes provide controlled entry points for administrative access, themselves protected by strict authentication and monitoring. Private connectivity options like AWS PrivateLink or Azure Private Link enable applications to access data lakes without traversing public internet, eliminating exposure to network-level attacks.

Service mesh architectures implement zero-trust networking within the data lake environment, requiring mutual TLS authentication between all services and enforcing encryption for inter-service communication. Network policies define allowed traffic flows at a granular level, permitting only necessary connections and denying everything else by default. Micro-segmentation isolates different data lake components—ingestion services, processing engines, query interfaces—limiting lateral movement if one component is compromised.

Implement security testing and vulnerability management

Continuous security validation ensures that data lake protections remain effective as configurations change, new vulnerabilities emerge, and attack techniques evolve. Proactive testing identifies weaknesses before adversaries exploit them, enabling remediation under controlled conditions rather than during active incidents.

Vulnerability scanning systems regularly assess data lake infrastructure for known security issues—unpatched software, insecure configurations, weak encryption settings, default credentials. Automated remediation workflows can apply security updates during maintenance windows, adjust configurations to match security baselines, and rotate credentials on schedules. Prioritization engines focus remediation efforts on vulnerabilities with highest risk based on exploitability, asset criticality, and environmental factors.

Partner with Kellton for enterprise-grade data lake security

As enterprises navigate the complexity of modern data architectures, security cannot be an afterthought or checkbox exercise. Our approach combines deep technical knowledge of cloud platforms, regulatory compliance expertise across industries and geographies, and practical experience implementing security at scale for global enterprises.

We understand that effective data lake security balances protection with accessibility, enabling data democratization while preventing unauthorized exposure. From initial security assessments and compliance gap analysis through architecture design, implementation, and ongoing managed security services, Kellton serves as your trusted partner in data protection.

The threat landscape continues evolving, and data lake security requires continuous adaptation and vigilance. Let our proven methodologies and experienced team transform your data lake from a potential liability into a competitive advantage that drives business value with confidence.