By 2028, 55% of enterprise architecture teams are expected to shift from traditional business-outcome-driven enterprise architecture (BODEA) practices toward AI-based autonomous governance. The goal is to create an enterprise future-state architecture. Yet despite widespread AI adoption, many organizations still struggle to extract meaningful value from their AI investments.

The enterprise data architect has emerged as the critical catalyst in bridging this value gap, transforming raw organizational data into intelligent, autonomous systems that drive measurable business outcomes.

This comprehensive playbook explores proven strategies, frameworks, and methodologies that enterprise data architects use to accelerate AI transformation across complex organizational ecosystems.

What does an enterprise data architect do in AI transformation?

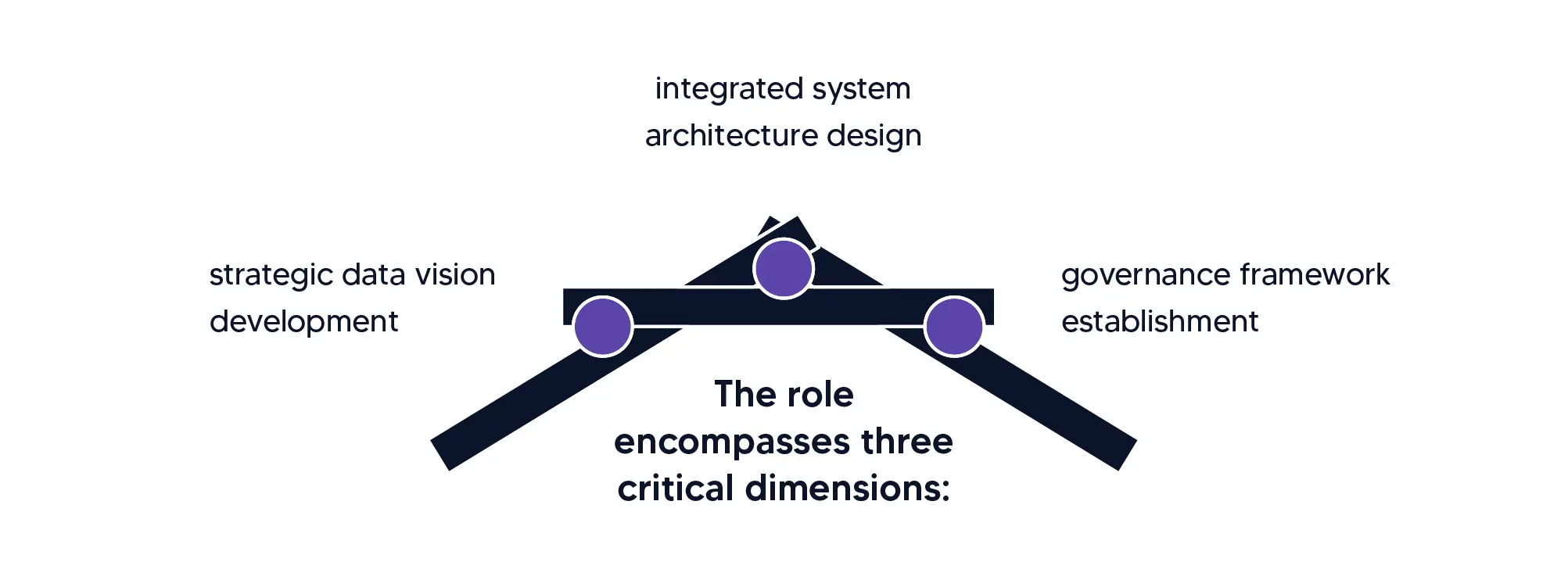

The enterprise data architect serves as the strategic orchestrator of organizational data assets, designing and implementing comprehensive architectures that enable AI systems to operate at enterprise scale. Unlike traditional database administrators or data engineers who focus on tactical implementation, enterprise data architects take a holistic view of how data flows through the organization to support intelligent decision-making.

In the AI transformation context, enterprise data architects become the bridge between business strategy and technical execution. They translate business requirements into architectural blueprints that support everything from basic machine learning models to sophisticated agentic AI systems capable of autonomous reasoning and decision-making.

Enterprise data architects must understand not only the technical aspects of data management but also the business implications of AI-driven automation and intelligent systems. Their responsibilities extend beyond traditional data warehousing to include designing architectures for real-time data streaming, implementing feature stores for machine learning operations, and creating data mesh frameworks that support distributed AI development across business domains.

How do data architect and enterprise architect roles complement AI initiatives?

The relationship between the data architect and enterprise architect roles creates a powerful synergy for AI transformation. While enterprise architects focus on organizational alignment and holistic technology strategy, data architects specialize in the technical foundation that makes AI possible.

Enterprise architects approach AI transformation from the business process perspective, ensuring that AI initiatives align with organizational goals, regulatory requirements, and operational constraints. They consider how AI will impact existing workflows, organizational structures, and technology investments across the enterprise.

Data architects, conversely, focus on building the technical infrastructure that enables AI systems to access, process, and learn from organizational data. They design the pipelines, storage systems, and processing frameworks that feed intelligent systems with high-quality, contextually relevant information.

The most successful AI transformations occur when these roles collaborate closely. Enterprise architects rely on data architects' domain expertise to make informed decisions about data-related technologies. In turn, data architects depend on enterprise architects to ensure their solutions integrate seamlessly with broader organizational systems.

This collaborative model becomes particularly important when implementing complex AI systems that require coordination across multiple business domains, each with unique data requirements and operational constraints.

What makes a data model enterprise architect essential for scaling AI?

A data model enterprise architect brings specialized expertise in designing scalable, reusable data models that support diverse AI workloads across the organization. These professionals understand how to create abstract, flexible data structures that can adapt to evolving AI requirements while maintaining consistency and performance.

The role becomes essential when organizations move beyond isolated AI pilots to enterprise-scale deployments. Data model enterprise architects design canonical data models that serve as the foundation for multiple AI applications, ensuring consistency in how data is interpreted and processed across different systems.

They establish standardized approaches to data modeling that support both transactional systems and analytical workloads, creating unified views of business entities that AI systems can reliably consume. This includes designing master data management frameworks, establishing data lineage tracking, and implementing semantic data layers that make organizational knowledge accessible to AI systems.
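To make the idea of a canonical data model concrete, here is a minimal Python sketch. It assumes two hypothetical source systems (a CRM and a billing system) that describe the same customer with different field names and formats; the `CanonicalCustomer` type and the `from_crm`/`from_billing` mappers are illustrative names, not part of any specific master data management product.

```python
from dataclasses import dataclass
from datetime import date, datetime


@dataclass(frozen=True)
class CanonicalCustomer:
    """One enterprise-wide definition of a customer (hypothetical schema)."""
    customer_id: str
    full_name: str
    email: str
    signup_date: date
    source_system: str  # kept for lineage: which system produced this record


def from_crm(record: dict) -> CanonicalCustomer:
    # Hypothetical CRM export: split name fields, ISO-8601 dates.
    return CanonicalCustomer(
        customer_id=f"CRM-{record['id']}",
        full_name=f"{record['first_name']} {record['last_name']}".strip(),
        email=record["email"].strip().lower(),
        signup_date=date.fromisoformat(record["created"]),
        source_system="crm",
    )


def from_billing(record: dict) -> CanonicalCustomer:
    # Hypothetical billing export: single name field, MM/DD/YYYY dates.
    return CanonicalCustomer(
        customer_id=f"BILL-{record['account_no']}",
        full_name=record["name"].strip(),
        email=record["contact_email"].strip().lower(),
        signup_date=datetime.strptime(record["opened"], "%m/%d/%Y").date(),
        source_system="billing",
    )


crm = from_crm({"id": 42, "first_name": "Ada", "last_name": "Lovelace",
                "email": " Ada@Example.com ", "created": "2023-05-01"})
billing = from_billing({"account_no": "A-7", "name": "Ada Lovelace",
                        "contact_email": "ada@example.com", "opened": "05/01/2023"})
# Both sources now agree on how the entity is represented.
print(crm.email == billing.email, crm.signup_date == billing.signup_date)
```

The design choice is that AI consumers only ever read `CanonicalCustomer`; source-specific quirks live in the mappers, so adding a new source system means writing one more mapper rather than changing every downstream model.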

Their expertise becomes particularly valuable when implementing federated AI architectures where multiple business units develop their own AI solutions while maintaining data consistency and governance standards across the enterprise.

How does a data solution architect enable modern AI implementations?

Data solution architects focus on implementing specific, targeted solutions that solve discrete business problems using AI technologies. They translate architectural blueprints into working systems that deliver measurable business value while maintaining alignment with enterprise standards.

In AI implementations, data solution architects design and build the technical components that support specific use cases: customer recommendation engines, predictive maintenance systems, intelligent process automation, and autonomous decision-making platforms. They select appropriate technologies, design integration patterns, and implement monitoring and management capabilities.

Their role involves deep technical expertise in cloud platforms, distributed computing frameworks, real-time data processing systems, and machine learning operations tools. They must understand how to optimize performance, ensure scalability, and maintain reliability for AI systems operating in production environments.

Data solution architects also serve as the primary interface between business stakeholders and technical implementation teams, translating business requirements into technical specifications and ensuring that delivered solutions meet both functional and operational requirements.

How can data and analytics teams accelerate enterprise AI adoption?

Data and analytics teams function as the operational engine driving AI transformation within enterprises. They serve as the critical bridge between high-level architectural vision and practical day-to-day implementation, ensuring AI systems operate reliably in production environments.

These teams accelerate enterprise AI adoption through multiple interconnected mechanisms, including establishing robust data quality monitoring and validation processes, implementing automated data pipeline management systems, and creating comprehensive data observability frameworks that provide essential visibility into data health and overall system performance.

Beyond technical implementation, these teams play a crucial role in organizational change management by working directly with business users to help them understand AI capabilities, providing comprehensive training on new tools and processes, and systematically gathering feedback that informs continuous improvement efforts across the organization.

As the frontline interface between technical AI capabilities and tangible business value, data and analytics teams implement the operational practices fundamental to AI success: MLOps workflows, automated model monitoring and retraining, A/B testing frameworks, and performance measurement systems that demonstrate the business impact of AI investments to stakeholders across the enterprise.
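Automated model monitoring often starts with a drift statistic that compares live input data against the training distribution. The sketch below computes the Population Stability Index (PSI), one common choice, defined over binned proportions as PSI = Σ (a_i − e_i) · ln(a_i / e_i), where e_i is the training share and a_i the live share of bin i. The 0.1 and 0.25 thresholds are widely used rules of thumb rather than a universal standard, and the `drift_action` retraining policy is hypothetical.

```python
import math


def population_stability_index(expected, actual, eps=1e-6):
    """PSI between two binned distributions given as per-bin proportions.

    `expected` is the reference (training) distribution, `actual` is live data.
    Zero proportions are clipped to `eps` to avoid log(0).
    """
    if len(expected) != len(actual):
        raise ValueError("distributions must have the same number of bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


def drift_action(psi, warn=0.1, retrain=0.25):
    # Rule-of-thumb thresholds (assumption): <0.1 stable, 0.1-0.25 watch, >=0.25 retrain.
    if psi >= retrain:
        return "retrain"
    if psi >= warn:
        return "investigate"
    return "ok"


training_bins = [0.25, 0.25, 0.25, 0.25]
live_bins = [0.10, 0.20, 0.30, 0.40]
score = population_stability_index(training_bins, live_bins)
print(round(score, 3), drift_action(score))
```

In practice a scheduler would run this per feature on each new batch and open a retraining job or alert only when the action escalates, which keeps retraining tied to measured change rather than a fixed calendar.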

What are 10 ways a data architect can enable AI transformation?

Modern data architects can enable AI transformation through strategic approaches that address both technical and organizational challenges. Here are 10 ways a data architect can make an impact:

1. Implement modern data architecture patterns:

Deploy data mesh and data fabric architectures that support distributed AI development across the organization while maintaining robust governance standards and ensuring consistent data quality across all systems and teams.

2. Create comprehensive data cataloging and metadata management systems:

Develop automated data discovery capabilities, lineage tracking systems, and impact analysis tools that enable AI systems to effectively discover, understand, and utilize available data assets throughout the enterprise.

3. Establish real-time data processing capabilities:

Build streaming data architectures and event-driven systems that support AI applications requiring immediate decisions and responses, and use edge computing to bring AI processing closer to data sources.

4. Build robust data quality and validation frameworks:

Implement automated data profiling systems, anomaly detection capabilities, and quality scoring mechanisms that ensure AI systems receive reliable, accurate data to produce trustworthy and actionable results.

5. Design and implement feature stores:

Create reusable, consistently defined feature repositories for machine learning models that reduce development time, ensure consistency across different AI applications, and promote standardization throughout the organization.

6. Establish comprehensive data governance frameworks:

Develop policies and systems that ensure AI operations remain within ethical, legal, and regulatory boundaries while maintaining strict data privacy standards and robust security controls across all AI implementations.

7. Implement MLOps and AIOps practices:

Automate the deployment, monitoring, and management of AI systems in production environments, ensuring reliable operation at enterprise scale with continuous performance optimization and issue resolution.

8. Create self-service data platforms:

Build democratized access systems that enable business users and analysts to access data and AI capabilities across the organization while maintaining appropriate governance controls and security measures to protect sensitive information.

9. Establish data observability and monitoring capabilities:

Implement comprehensive visibility systems that track data health, monitor system performance, and observe AI model behavior, enabling proactive identification and resolution of issues before they impact business operations.

10. Build cross-functional collaboration frameworks:

Develop structured approaches that bring together data architects, business stakeholders, and AI practitioners to ensure technical solutions align with business requirements and deliver measurable value that supports organizational objectives and strategic goals.
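Of the items above, the feature store (item 5) is the easiest to illustrate in a few lines. Below is a minimal, in-memory Python sketch of the core idea: each feature is defined once, under a unique name, and the same definition is reused to build both training rows and online (serving) rows, which helps avoid training/serving skew. The `FeatureRegistry` class and the example features are hypothetical; production feature stores add persistent offline and online storage, versioning, and point-in-time correct joins.

```python
from typing import Callable, Dict, List

FeatureFn = Callable[[dict], float]


class FeatureRegistry:
    """Hypothetical minimal feature store: one definition per feature name."""

    def __init__(self) -> None:
        self._features: Dict[str, FeatureFn] = {}

    def register(self, name: str):
        # Decorator that registers a feature function under a unique name.
        def decorator(fn: FeatureFn) -> FeatureFn:
            if name in self._features:
                raise ValueError(f"feature '{name}' is already defined")
            self._features[name] = fn
            return fn
        return decorator

    def compute(self, entity: dict, names: List[str]) -> Dict[str, float]:
        # The same code path serves offline (training) and online (serving) use.
        return {n: self._features[n](entity) for n in names}


registry = FeatureRegistry()


@registry.register("order_count")
def order_count(customer: dict) -> float:
    return float(len(customer["orders"]))


@registry.register("avg_order_value")
def avg_order_value(customer: dict) -> float:
    orders = customer["orders"]
    return sum(orders) / len(orders) if orders else 0.0


customers = [
    {"id": "c1", "orders": [20.0, 30.0, 40.0]},
    {"id": "c2", "orders": []},
]
features = ["order_count", "avg_order_value"]
training_rows = [registry.compute(c, features) for c in customers]  # offline batch
online_row = registry.compute(customers[0], features)  # single request at serving time
print(training_rows)
print(online_row)
```

Rejecting duplicate names is the small but important design choice here: it forces teams to reuse an existing definition instead of quietly creating a second, slightly different one.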

How do enterprise data architects design for autonomous AI systems?

Designing architectures for autonomous AI systems requires a fundamental shift away from traditional request-response patterns toward continuous, event-driven processing that supports real-time decision-making and adaptive behavior. Enterprise data architects must design systems that continuously ingest, process, and act on data streams without human intervention, which fundamentally changes how enterprise systems operate and respond to their environments.

The foundation of an autonomous AI system is an event-driven architecture that processes continuous data streams, maintains state across multiple interactions, and coordinates actions across distributed components. It must also support complex reasoning workflows, which often involve several AI models working together, drawing on external data sources, and following decision paths that adapt to changing conditions and learned experience.

Beyond processing capabilities, enterprise data architects must design for explainability and auditability: autonomous systems should provide clear, understandable reasoning for their decisions and keep comprehensive logs of every action taken. This is critical both for meeting evolving regulatory requirements and for building the organizational trust that widespread adoption of AI-driven processes depends on, since stakeholders need confidence that these decisions can be understood and justified when necessary.
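The following Python sketch shows these ideas in miniature: an event-driven handler that keeps per-entity state across events, makes a decision without human intervention, and appends an audit record that explains each decision. The event shape, the `AutonomousProcessor` class, and the rule-based `decide` method (a stand-in for one or more AI models) are all hypothetical; the in-memory queue and list stand in for a durable event stream and an append-only audit store.

```python
import json
from collections import defaultdict, deque
from datetime import datetime, timezone


class AutonomousProcessor:
    """Hypothetical event-driven processor with per-entity state and an audit log."""

    def __init__(self, threshold: float = 3.0) -> None:
        self.threshold = threshold
        self.state = defaultdict(lambda: {"events": 0, "total": 0.0})  # per-entity state
        self.audit_log: list[str] = []  # append-only JSON lines

    def decide(self, entity_id: str, amount: float) -> tuple[str, str]:
        # Placeholder for model inference: hold amounts far above the running mean.
        s = self.state[entity_id]
        mean = s["total"] / s["events"] if s["events"] else amount
        if s["events"] >= 3 and amount > self.threshold * mean:
            return "hold", f"amount {amount} exceeds {self.threshold}x running mean {mean:.2f}"
        return "approve", f"amount {amount} within expected range (mean {mean:.2f})"

    def handle(self, event: dict) -> str:
        entity_id, amount = event["entity_id"], float(event["amount"])
        action, reason = self.decide(entity_id, amount)
        # Update state after deciding, so each decision uses only prior history.
        s = self.state[entity_id]
        s["events"] += 1
        s["total"] += amount
        # Record what was seen, what was done, and why.
        self.audit_log.append(json.dumps({
            "ts": datetime.now(timezone.utc).isoformat(),
            "event": event,
            "action": action,
            "reason": reason,
        }))
        return action


events = deque([
    {"entity_id": "acct-1", "amount": 100},
    {"entity_id": "acct-1", "amount": 120},
    {"entity_id": "acct-1", "amount": 80},
    {"entity_id": "acct-1", "amount": 900},
])
proc = AutonomousProcessor()
while events:  # stands in for a continuous stream consumer
    print(proc.handle(events.popleft()))
for line in proc.audit_log:
    print(line)
```

Because the reason string is written alongside the action, a reviewer can reconstruct why the last event was held without rerunning the model.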

What challenges do enterprise data architects face in AI implementation?

Enterprise data architects face interconnected technical, organizational, and strategic challenges when implementing AI systems at scale, and these must be carefully balanced. The fundamental difficulty is integrating AI capabilities with existing enterprise infrastructure while maintaining the performance, security, and reliability that critical operations depend on.

Persistent data quality and consistency issues are central to these challenges and become especially acute in AI implementations. AI systems need high-quality data drawn from many disparate systems, each with its own formats, update frequencies, and quality standards. Enterprise data architects must therefore design systems that automatically detect, correct, and prevent data quality issues without degrading overall performance or introducing new bottlenecks.
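A minimal Python sketch of the detect, correct, and prevent loop, assuming records arrive from two hypothetical sources with different date formats and amount units: known format differences are normalized (correct), records that fail validation rules are quarantined instead of being passed to AI consumers (prevent), and every rejected record carries the reason it failed (detect). The source names, field names, formats, and rules are illustrative assumptions.

```python
from datetime import datetime

# Hypothetical per-source date formats, e.g. discovered during data profiling.
DATE_FORMATS = {"erp": "%Y-%m-%d", "legacy": "%d/%m/%Y"}


def normalize(record: dict) -> dict:
    """Correct known, source-specific differences into one common format."""
    fixed = dict(record)
    fmt = DATE_FORMATS[record["source"]]
    fixed["order_date"] = datetime.strptime(record["order_date"], fmt).date().isoformat()
    if record["source"] == "legacy":
        # Hypothetical: the legacy system stores amounts in cents.
        fixed["amount"] = record["amount"] / 100
    return fixed


def validate(record: dict) -> list[str]:
    """Detect rule violations; an empty list means the record is usable."""
    problems = []
    if not record.get("customer_id"):
        problems.append("missing customer_id")
    amount = record.get("amount")
    if not isinstance(amount, (int, float)):
        problems.append("missing or non-numeric amount")
    elif amount < 0:
        problems.append("negative amount")
    return problems


def run_pipeline(raw_records):
    """Return (clean, quarantine); quarantined items carry their problems."""
    clean, quarantine = [], []
    for raw in raw_records:
        try:
            rec = normalize(raw)
        except (KeyError, TypeError, ValueError) as exc:
            quarantine.append((raw, [f"unparseable: {exc}"]))
            continue
        problems = validate(rec)
        if problems:
            quarantine.append((rec, problems))  # keep bad data away from models
        else:
            clean.append(rec)
    return clean, quarantine


raw = [
    {"source": "erp", "customer_id": "C1", "order_date": "2024-03-05", "amount": 250.0},
    {"source": "legacy", "customer_id": "C2", "order_date": "05/03/2024", "amount": 12500},
    {"source": "legacy", "customer_id": "", "order_date": "31/02/2024", "amount": 100},
    {"source": "erp", "customer_id": "C3", "order_date": "2024-03-06", "amount": -5.0},
]
clean, quarantine = run_pipeline(raw)
print(clean)
for rec, problems in quarantine:
    print(problems)
```

Quarantining rather than dropping keeps the rejected records available for root-cause analysis, which is how recurring issues get fixed upstream instead of being patched downstream indefinitely.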

Scalability is another significant challenge, because AI workloads can grow rapidly and unpredictably. Architectures must handle large data volumes, complex processing requirements, and fluctuating performance demands without degrading reliability or user experience. Because this growth is hard to forecast, traditional capacity planning alone is insufficient; more dynamic and flexible architectural approaches are needed.
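One common alternative to static capacity planning is metric-driven autoscaling. For example, the Kubernetes Horizontal Pod Autoscaler scales proportionally using desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python sketch below applies that same proportional rule with minimum and maximum bounds and a tolerance band to avoid flapping; the bounds, the tolerance value, and the queue-depth metric are illustrative assumptions, not recommendations.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 2, max_replicas: int = 50,
                     tolerance: float = 0.1) -> int:
    """Scale replicas proportionally to load (HPA-style), within fixed bounds."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: do not scale
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Hypothetical metric: average queued inference requests per replica (target 20).
print(desired_replicas(4, current_metric=65, target_metric=20))   # load spike
print(desired_replicas(10, current_metric=5, target_metric=20))   # load drop
print(desired_replicas(6, current_metric=21, target_metric=20))   # within tolerance
```

The bounds matter as much as the formula: the floor preserves availability during quiet periods, and the ceiling caps cost if a metric misbehaves.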