Most enterprises spent years running AI pilots that never made it to production. The blockers were consistent: data compliance failures, unpredictable API costs that spiked during load tests, and security teams rejecting any solution that sent proprietary data to third-party servers. That pattern has now reversed. Organizations are deploying AI at scale because the Azure OpenAI service removed the structural barriers that kept enterprise AI stuck in proof-of-concept limbo.

Those barriers were data sovereignty concerns, compliance requirements, and infrastructure complexity, and Azure OpenAI architecture addresses each of them directly. It delivers OpenAI's GPT models through Microsoft's enterprise-grade cloud infrastructure with built-in compliance certifications, predictable costs through reserved capacity, and integration with existing Azure services.

This shift matters because the performance gap between AI leaders and laggards is widening. According to 2025 BCG research, companies that deeply integrated AI achieved 2.3x higher revenue growth and 1.8x higher profit margins than late adopters. Azure OpenAI service provides the infrastructure to close that gap.

This guide examines how Azure OpenAI is transforming enterprise operations across banking, insurance, and manufacturing. We'll cover the architecture that enables enterprise deployment, real-world Azure OpenAI use cases, and implementation best practices.

What is Microsoft Azure OpenAI service?

Azure OpenAI service is Microsoft's enterprise offering that provides API access to OpenAI's advanced language models, including GPT-4.1, GPT-5, and o-series reasoning models, through Azure's cloud infrastructure. Unlike direct OpenAI API access, Azure OpenAI is provisioned as a resource in your own Azure subscription, with enterprise-grade security controls, compliance certifications, and integration with Azure's ecosystem.

The service launched as a collaboration between Microsoft and OpenAI in November 2021. By early 2026, it had become the dominant enterprise AI platform, with 80% of Fortune 500 companies adopting Azure AI Foundry (the platform that encompasses Azure OpenAI service).

Azure OpenAI service operates as a managed service within Azure AI services (formerly Azure Cognitive Services). Organizations create an Azure OpenAI resource in their chosen Azure region and deploy specific models to it as named deployments. Each deployment has its own configurable throughput and content filtering. Network and identity access controls apply at the resource level.

The January 2026 architecture update introduced significant improvements. Organizations can now use Microsoft Foundry, which provides access to an ecosystem of 11,000+ models, including OpenAI, Anthropic Claude, Cohere, and open-source alternatives. The v1 API removed the need to update the api-version parameter every month. It also made the standard OpenAI client libraries compatible with Azure endpoints, and it supports automatic Microsoft Entra ID token refresh without requiring the separate AzureOpenAI client class.

Recent model releases demonstrate the platform's rapid evolution. GPT-4.1 launched with a 1-million-token context window. The o4-mini and o3 reasoning models provide enhanced chain-of-thought capabilities. GPT-5-chat version 2025-10-03 introduced specialized capabilities for emotional intelligence and mental health responses. For multimodal applications, the GPT-image-1.5 image model and the Sora 2 video generation model expanded creative production capabilities.

Why does Azure OpenAI service matter for enterprises?

The following differentiators distinguish Azure OpenAI from alternative approaches:

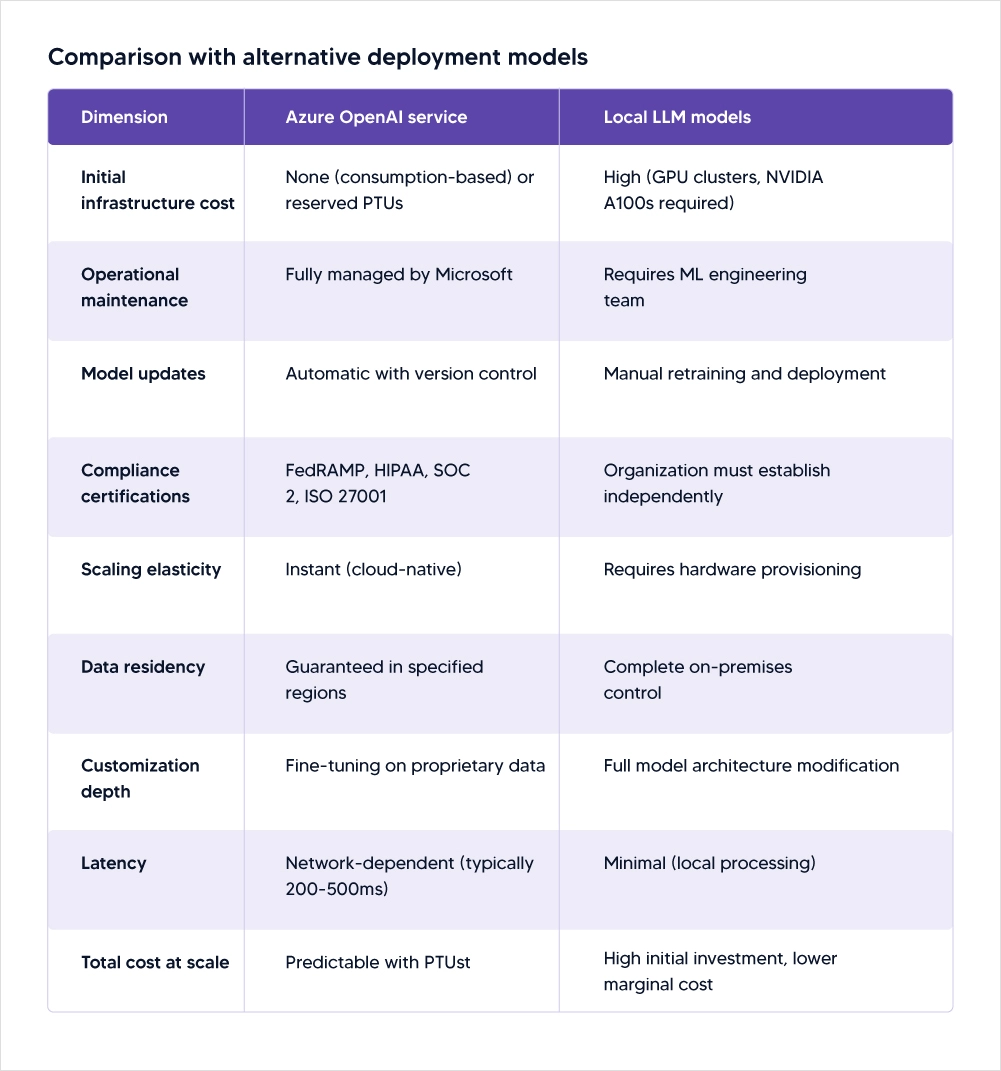

- Data sovereignty and compliance: Azure OpenAI lets organizations choose the Azure geography where their data is stored and processed. Customer prompts and completions are not used to train OpenAI's models. Global deployment types may process prompts in any Azure region, so regulated workloads typically use regional or Data Zone deployments. This addresses the primary concern that blocked enterprise AI adoption. The service is covered by compliance offerings including FedRAMP High, SOC 2, and ISO 27001, and it supports customers' HIPAA and GDPR obligations. For regulated industries like healthcare and financial services, these aren't optional features but deployment prerequisites.

- Provisioned throughput and cost control: Organizations purchase reserved capacity measured in Provisioned Throughput Units (PTUs). This replaces variable per-token costs with a fixed charge and avoids the rate limiting that plagues production deployments on shared, consumption-based capacity. A financial services firm processing 50 million customer service interactions monthly can accurately forecast AI infrastructure costs. Under consumption-based pricing, whether through the direct OpenAI API or Azure's own pay-as-you-go deployments, the same workload creates unpredictable expenses that spike during usage surges.

- Native Azure integration: Azure OpenAI connects directly to Microsoft Entra ID (formerly Azure Active Directory) for authentication, Azure Monitor for observability, Azure Key Vault for secrets management, and Azure Private Link for network isolation. Organizations already invested in Azure infrastructure avoid the integration overhead required when adopting standalone AI services.

- Version control and deployment governance: Azure OpenAI uses deployment-based model management, so organizations control exactly which model version serves production traffic. When a new release such as GPT-5.1 arrives, enterprises test it in development environments before promoting it to production. This prevents the subtle behavior changes that occur when an application calls a model alias that is updated automatically. That risk applies to the direct OpenAI API and to Azure deployments left on an auto-upgrade version policy.

- Content filtering and responsible AI: Built-in content filters scan inputs and outputs for harmful content, PII leakage, and policy violations. Organizations configure filter sensitivity based on their risk tolerance. The January 2026 AI Studio Auto-Governance module automates compliance monitoring for regulations like GDPR and emerging AI-specific legislation.
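The cost-predictability point can be made concrete with a little arithmetic. Below is a minimal Python sketch that compares a per-token bill with a fixed PTU reservation as traffic grows. Every price, token count, and PTU quantity is a hypothetical placeholder, not a published Azure rate. The number of PTUs a workload actually needs must come from Azure's capacity planning tools, not from this sketch.

```python
from dataclasses import dataclass

# Hypothetical inputs only: replace with your negotiated prices and measured token counts.
HOURS_PER_MONTH = 730  # a common convention for billing a month of hourly charges


@dataclass(frozen=True)
class Workload:
    interactions_per_month: int
    input_tokens_per_interaction: int
    output_tokens_per_interaction: int

    def scaled(self, factor: float) -> "Workload":
        """Return the same workload at a different volume, e.g. a seasonal surge."""
        return Workload(
            int(self.interactions_per_month * factor),
            self.input_tokens_per_interaction,
            self.output_tokens_per_interaction,
        )


def consumption_cost(w: Workload, input_price_per_m: float, output_price_per_m: float) -> float:
    """Monthly cost under per-token pricing: grows linearly with traffic."""
    input_m = w.interactions_per_month * w.input_tokens_per_interaction / 1_000_000
    output_m = w.interactions_per_month * w.output_tokens_per_interaction / 1_000_000
    return input_m * input_price_per_m + output_m * output_price_per_m


def provisioned_cost(ptus: int, price_per_ptu_hour: float) -> float:
    """Monthly cost of reserved capacity: fixed regardless of traffic within the allocation."""
    return ptus * price_per_ptu_hour * HOURS_PER_MONTH


baseline = Workload(50_000_000, 500, 200)  # 50M interactions, as in the example above
surge = baseline.scaled(1.5)               # a hypothetical 50% traffic spike

for label, w in (("baseline", baseline), ("surge", surge)):
    print(label, "consumption:", consumption_cost(w, 2.00, 8.00),
          "provisioned:", provisioned_cost(100, 1.00))
```

With placeholder prices, comparing the absolute figures tells you nothing. What matters is that the surge row changes only the consumption figure, which is the forecasting property described above.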

Organizations choose local LLMs when data cannot leave premises or when domain-specific fine-tuning requires architectural modifications. Azure OpenAI wins when time-to-production, compliance requirements, and operational simplicity outweigh customization needs.

How is Azure OpenAI service being adopted across industries?

Organizations aren't deploying AI uniformly across operations. Instead, they concentrate on high-impact use cases where AI delivers measurable ROI.

1. Banking and financial services

Banks deploy Azure OpenAI service across three critical operations. Regulatory compliance teams use the service to analyze thousands of annual regulatory updates, identify relevant changes, and generate compliance impact assessments. Customer service operations use AI-powered virtual assistants that integrate with core banking platforms through Azure API Management. Fraud detection teams augment existing models by analyzing transaction narratives, customer communication patterns, and historical fraud cases. The reasoning capabilities of o-series models explain why specific transactions trigger alerts. This helps analysts dismiss false positives faster, so fewer legitimate customers are frustrated by blocked transactions.

2. Insurance

Insurance companies deploy Azure OpenAI across claims processing, underwriting, and policy documentation. Claims teams use the service to extract information from claims documents, medical records, and accident reports, and GPT-4.1's 1-million-token context window can process an entire claim file in a single API call. In underwriting, AI assistants connect to actuarial databases, claims history, and external risk data sources to analyze risk factors, identify coverage gaps, and recommend policy terms. The system generates underwriting recommendations with explanations that satisfy audit requirements.
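Before sending a claim file in one call, it is worth checking that it fits. The Python sketch below groups a claim's documents into as few requests as fit a 1-million-token window. It makes two assumptions:

- Roughly four characters per token, a crude heuristic for English text.
- A hypothetical 8,000-token reserve for the model's response.

A production system would count tokens with a real tokenizer and use the deployed model's documented limits.

```python
CONTEXT_WINDOW_TOKENS = 1_000_000  # the GPT-4.1 context size cited above
CHARS_PER_TOKEN = 4  # rough heuristic for English text; use a real tokenizer for exact counts


def estimate_tokens(text: str) -> int:
    """Approximate token count using ceiling division on character length."""
    return -(-len(text) // CHARS_PER_TOKEN)


def plan_requests(documents: list[str], reserved_output_tokens: int = 8_000) -> list[list[int]]:
    """Greedily pack document indexes into batches that each fit the context window."""
    budget = CONTEXT_WINDOW_TOKENS - reserved_output_tokens
    batches: list[list[int]] = []
    current: list[int] = []
    used = 0
    for i, doc in enumerate(documents):
        tokens = estimate_tokens(doc)
        if tokens > budget:
            raise ValueError(f"document {i} alone exceeds the token budget and must be chunked")
        if current and used + tokens > budget:
            batches.append(current)  # close this request and start a new one
            current, used = [], 0
        current.append(i)
        used += tokens
    if current:
        batches.append(current)
    return batches


claim_file = [
    "Police report: vehicle struck from behind at low speed.",
    "Medical record: soft-tissue neck injury, two follow-up visits.",
    "Adjuster notes: rear bumper and trunk damage photographed.",
]
print(plan_requests(claim_file))  # → [[0, 1, 2]]
```

A single batch means the whole file fits in one call. More than one batch means the application needs a chunking or summarization step first.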

3. Manufacturing

Manufacturers apply Azure OpenAI to predictive maintenance, supply chain intelligence, and quality control. Production teams connect the service to IoT sensor data from equipment, analyzing maintenance logs, sensor readings, and failure patterns to predict equipment failures. Using GPT-4.1 to identify early failure indicators that traditional statistical models missed can reduce unplanned downtime by as much as 34%. Similarly, supply chain teams can use the service to process supplier communications, logistics data, and market intelligence to identify risks before they materialize.

How does Azure OpenAI architecture address common AI adoption challenges?

Enterprise AI adoption historically failed at three specific points: security and compliance validation, production deployment reliability, and cross-functional integration. Azure OpenAI architecture addresses each of these failure modes and adds tooling for a fourth concern, cost optimization.

1. Security and data governance

Azure Private Link enables organizations to access Azure OpenAI service through private IP addresses within their virtual network, so API traffic never traverses the public internet. This network isolation satisfies security requirements for organizations handling sensitive data. Microsoft Entra ID (formerly Azure Active Directory) integration provides role-based access control, conditional access policies, and multi-factor authentication. Organizations grant model access based on user roles rather than distributing shared API keys. When employees leave, their Azure OpenAI access terminates automatically through existing identity workflows, provided key-based authentication is disabled on the resource.

2. Production reliability

Azure OpenAI provides a 99.9% uptime SLA backed by service credits when the commitment is missed. This commitment enables organizations to build customer-facing applications on the platform. By comparison, standard pay-as-you-go OpenAI API access does not include a comparable uptime SLA. Provisioned Throughput Units reserve dedicated capacity, and each PTU corresponds to a model-specific tokens-per-minute throughput. Traffic within the purchased allocation avoids the shared-capacity rate limits that disrupt production applications, so latency stays consistent during usage spikes. Requests beyond the allocation still receive HTTP 429 responses and should be retried or routed to a standard deployment.
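Even with reserved capacity, traffic that exceeds an allocation or a standard deployment's quota is answered with HTTP 429. Production clients therefore usually wrap calls in a retry loop. The sketch below is a generic, standard-library version:

- `RateLimitedError` is a hypothetical exception that your client wrapper would raise on a 429. It carries the `Retry-After` value when the service sends one.
- The delay defaults are illustrative.

The OpenAI Python SDK also has its own `max_retries` setting, which may be enough on its own.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class RateLimitedError(Exception):
    """Hypothetical error raised by a client wrapper when the service returns HTTP 429."""

    def __init__(self, retry_after: Optional[float] = None) -> None:
        super().__init__("rate limited (HTTP 429)")
        self.retry_after = retry_after  # seconds, from the Retry-After header if present


def call_with_backoff(
    fn: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying on rate limits with the server's hint or jittered exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 0
    while True:
        attempt += 1
        try:
            return fn()
        except RateLimitedError as err:
            if attempt >= max_attempts:
                raise  # give up and surface the 429 to the caller
            if err.retry_after is not None:
                delay = err.retry_after  # honor the service's Retry-After hint
            else:
                # "Full jitter": a random delay up to an exponentially growing cap.
                delay = random.uniform(0, min(max_delay, base_delay * 2 ** (attempt - 1)))
            sleep(delay)
```

Passing `sleep` in as a parameter lets tests run the retry logic without actually waiting.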

3. Integration and ecosystem connectivity

The v1 API update standardized OpenAI client compatibility. Organizations using OpenAI's Python SDK can point existing code at Azure OpenAI by setting the base_url parameter to their resource's /openai/v1/ endpoint and supplying an Azure credential. This portability reduces vendor lock-in and simplifies code migration. Azure OpenAI connects natively to Azure AI Search (formerly Azure Cognitive Search) for RAG implementations, Azure Cosmos DB for vector storage, Azure Functions for serverless workflows, and Azure Logic Apps for process automation. These pre-built connectors reduce integration development time from weeks to days.
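Here is a minimal sketch of the endpoint swap. It assumes the v1 URL pattern `https://<resource>.openai.azure.com/openai/v1/` and uses placeholder resource and environment-variable names. The function only builds the settings. The commented lines show how they would be passed to the `openai` package's `OpenAI` client. That package is not imported, so the sketch runs without network access or credentials. On Azure, the `model` argument names your deployment, not the underlying model.

```python
from typing import Dict, Optional

OPENAI_BASE_URL = "https://api.openai.com/v1"


def client_settings(provider: str, resource_name: Optional[str] = None) -> Dict[str, str]:
    """Return the base_url and the environment variable holding the key for a provider."""
    if provider == "openai":
        return {"base_url": OPENAI_BASE_URL, "api_key_env": "OPENAI_API_KEY"}
    if provider == "azure":
        if not resource_name or "." in resource_name or "/" in resource_name:
            raise ValueError("pass the bare Azure OpenAI resource name, e.g. 'contoso-ai'")
        return {
            "base_url": f"https://{resource_name}.openai.azure.com/openai/v1/",
            "api_key_env": "AZURE_OPENAI_API_KEY",  # placeholder variable name
        }
    raise ValueError(f"unknown provider: {provider}")


# Usage with the openai package (not executed here):
#   import os
#   from openai import OpenAI
#   s = client_settings("azure", "contoso-ai")
#   client = OpenAI(api_key=os.environ[s["api_key_env"]], base_url=s["base_url"])
#   client.chat.completions.create(model="my-gpt-41-deployment", messages=[...])
print(client_settings("azure", "contoso-ai")["base_url"])
```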

4. Cost optimization

Azure Monitor tracks token consumption per deployment and model. Combined with application-level logging or token metrics emitted through Azure API Management, usage can also be attributed to individual users and applications. Organizations identify which workloads consume the most tokens and optimize accordingly. One enterprise discovered that 40% of its token usage came from a single inefficient prompt template, and rewriting that prompt reduced its monthly Azure OpenAI costs by $47,000. Microsoft's model router automatically selects a suitable model for each request based on its complexity. Simple queries route to GPT-4.1-mini at lower cost, while complex reasoning tasks use o4-mini. This routing reduced one organization's costs by 30% while maintaining response quality.
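Finding a hotspot like that single prompt template starts with attributing tokens to something you can act on. The sketch below assumes each request is logged with its application, prompt-template name, and model. It also logs the `prompt_tokens` and `completion_tokens` counts that chat completion responses report in their `usage` field. The record layout and the 30% hotspot threshold are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List


@dataclass(frozen=True)
class UsageRecord:
    app: str
    prompt_template: str
    model: str
    prompt_tokens: int
    completion_tokens: int


def token_share(records: Iterable[UsageRecord], key: Callable[[UsageRecord], str]) -> Dict[str, float]:
    """Fraction of all tokens attributable to each key, largest first."""
    totals: Counter = Counter()
    for r in records:
        totals[key(r)] += r.prompt_tokens + r.completion_tokens
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {k: v / grand_total for k, v in totals.most_common()}


def hotspots(shares: Dict[str, float], threshold: float = 0.3) -> List[str]:
    """Keys consuming more than `threshold` of all tokens: candidates for prompt rewrites."""
    return [k for k, share in shares.items() if share > threshold]


log = [
    UsageRecord("support-bot", "summarize-v1", "gpt-4.1", 300, 100),
    UsageRecord("support-bot", "faq-v3", "gpt-4.1-mini", 150, 50),
    UsageRecord("sales-assist", "triage-v2", "o4-mini", 120, 80),
]
by_template = token_share(log, key=lambda r: r.prompt_template)
print(by_template)
print(hotspots(by_template))  # → ['summarize-v1']
```

Passing a different `key` (for example `lambda r: r.app`) gives the per-application view from the same log.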

What are the best practices for deploying Azure OpenAI service?

Organizations that successfully deployed Azure OpenAI at scale followed consistent patterns. These practices emerged from deployments at Fortune 500 companies and reflect hard-learned lessons from production failures.

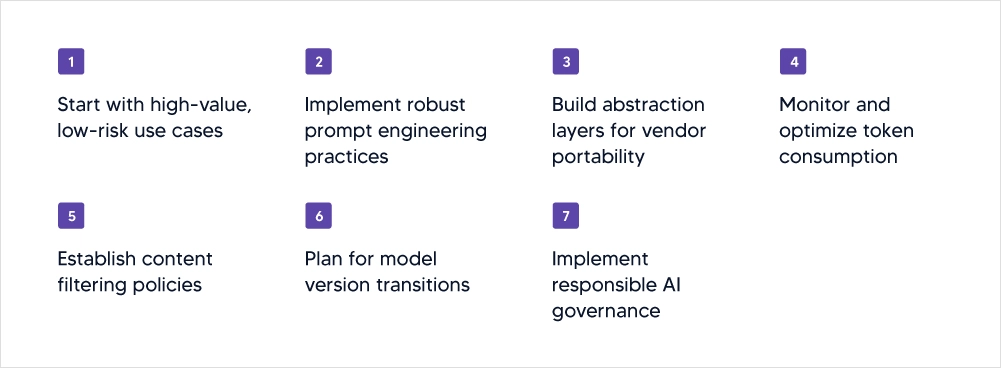

1. Start with high-value, low-risk use cases

Deploy AI in domains where errors create minimal business impact but ROI is immediately measurable. Internal knowledge management, employee support chatbots, and content generation for internal communications fit this profile. These deployments build organizational expertise without exposing customers to AI-generated errors.

Avoid starting with customer-facing applications, financial decision systems, or compliance-critical processes. These domains amplify errors and create reputational risk before teams develop operational competence.

2. Implement robust prompt engineering practices

Production prompts require systematic development. Document prompt templates in version control systems. Test prompts against diverse inputs to identify edge cases. Measure output quality using automated evaluation frameworks before promoting prompts to production.

Organizations with mature AI operations maintain prompt libraries organized by use case, so teams share effective prompts rather than rediscovering solutions independently. This knowledge sharing accelerated adoption across PwC's 200,000-seat deployment.
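These practices can be wired into a small promotion gate. In this sketch, a prompt template carries an explicit version so it can live in version control. It is promoted only when its mean score over a set of diverse test cases clears a threshold. `generate` and `score` are placeholders: the first stands for a call to your deployment, the second for whatever automated evaluator you use. The template text, cases, and 0.8 threshold are hypothetical.

```python
from dataclasses import dataclass
from string import Template
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class PromptTemplate:
    name: str
    version: str  # bump on every change so production logs identify the exact prompt
    text: str

    def render(self, **values: str) -> str:
        # Template.substitute raises KeyError if a placeholder has no value.
        return Template(self.text).substitute(**values)


TICKET_SUMMARY = PromptTemplate(
    name="ticket-summary",
    version="2.0.0",
    text="Summarize the support ticket below in at most $max_sentences sentences.\n\nTicket:\n$ticket",
)


def evaluate(
    template: PromptTemplate,
    cases: List[Dict],
    generate: Callable[[str], str],
    score: Callable[[str, str], float],
    threshold: float = 0.8,
) -> Tuple[bool, float]:
    """Return (passes_gate, mean_score) over cases with 'inputs' and 'expected' keys."""
    if not cases:
        raise ValueError("need at least one test case")
    scores = [score(generate(template.render(**c["inputs"])), c["expected"]) for c in cases]
    mean_score = sum(scores) / len(scores)
    return mean_score >= threshold, mean_score


# Edge cases worth covering include empty input, very long input, and non-ASCII text.
cases = [
    {"inputs": {"ticket": "Printer jams on page 2.", "max_sentences": "1"}, "expected": "printer"},
    {"inputs": {"ticket": "", "max_sentences": "1"}, "expected": "empty"},
]


def fake_generate(prompt: str) -> str:  # stand-in for a real model call
    return "Empty ticket." if prompt.endswith("Ticket:\n") else "Printer jams."


def contains_expected(output: str, expected: str) -> float:  # simplest possible evaluator
    return 1.0 if expected in output.lower() else 0.0


print(evaluate(TICKET_SUMMARY, cases, fake_generate, contains_expected))  # → (True, 1.0)
```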

3. Build abstraction layers for vendor portability

Don't embed Azure OpenAI-specific code throughout your application. Create a unified LLM interface that could swap providers if needed. This abstraction protects against pricing changes, service degradation, or strategic pivots to alternative models.

The abstraction layer should standardize message formats, error handling, token counting, and response streaming across different model providers. Organizations that implemented this pattern migrated between Azure OpenAI and local models in days rather than months when compliance requirements changed.
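Here is a minimal sketch of such a layer. It uses a Python `Protocol`, so any provider adapter that implements the same methods can be swapped in. The `Message`, `Completion`, and `LLMError` types are hypothetical names for this illustration. `EchoModel` is a stand-in used only to show the interface. A real adapter would wrap an Azure OpenAI or local-model client and translate its exceptions and usage counts into these types.

```python
from dataclasses import dataclass
from typing import Iterator, List, Protocol, Sequence


@dataclass(frozen=True)
class Message:
    role: str  # "system", "user", or "assistant"
    content: str


@dataclass(frozen=True)
class Completion:
    text: str
    prompt_tokens: int
    completion_tokens: int


class LLMError(Exception):
    """Provider-neutral error; adapters map SDK-specific exceptions onto it."""

    def __init__(self, message: str, retryable: bool) -> None:
        super().__init__(message)
        self.retryable = retryable


class ChatModel(Protocol):
    def complete(self, messages: Sequence[Message]) -> Completion: ...

    def stream(self, messages: Sequence[Message]) -> Iterator[str]: ...


class EchoModel:
    """Test double that satisfies ChatModel; word counts stand in for real token counts."""

    def complete(self, messages: Sequence[Message]) -> Completion:
        if not messages:
            raise LLMError("no messages supplied", retryable=False)
        text = "echo: " + messages[-1].content
        prompt_tokens = sum(len(m.content.split()) for m in messages)
        return Completion(text, prompt_tokens, len(text.split()))

    def stream(self, messages: Sequence[Message]) -> Iterator[str]:
        yield from self.complete(messages).text.split(" ")


def ask(model: ChatModel, question: str) -> str:
    """Application code depends only on the interface, never on a specific SDK."""
    messages: List[Message] = [
        Message("system", "You are a concise assistant."),
        Message("user", question),
    ]
    return model.complete(messages).text


print(ask(EchoModel(), "hello world"))  # → echo: hello world
```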

4. Monitor and optimize token consumption

Token costs compound quickly at scale. Implement logging that captures token usage by user, application component, and model. Identify inefficient prompts that consume excessive tokens without proportional value.

Common optimization opportunities include removing redundant context from prompts, compressing or truncating conversation history, and caching responses to frequently repeated requests. Organizations typically reduce token consumption by 25-40% through systematic optimization.
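Two of those optimizations, trimming conversation history and caching repeated requests, can be sketched with the standard library alone. The sketch makes three assumptions:

- The four-characters-per-token estimate is a rough heuristic.
- The message dictionaries follow the common chat format of `role` and `content`.
- `generate` stands in for a real call to your deployment.

Caching is appropriate only where identical inputs should yield identical answers, such as FAQ-style lookups.

```python
import hashlib
import json
from typing import Callable, Dict, List

ChatMessage = Dict[str, str]  # {"role": ..., "content": ...}


def approx_tokens(text: str) -> int:
    return -(-len(text) // 4)  # ~4 characters per token; use a real tokenizer in production


def trim_history(messages: List[ChatMessage], budget: int) -> List[ChatMessage]:
    """Keep a leading system message plus the most recent turns that fit in `budget` tokens."""
    system = messages[:1] if messages and messages[0]["role"] == "system" else []
    used = sum(approx_tokens(m["content"]) for m in system)
    kept: List[ChatMessage] = []
    for m in reversed(messages[len(system):]):
        cost = approx_tokens(m["content"])
        if used + cost > budget:
            break  # this turn and everything older are dropped
        kept.append(m)
        used += cost
    return system + kept[::-1]


class ResponseCache:
    """Memoize responses keyed by a hash of the deployment name and exact messages."""

    def __init__(self, generate: Callable[[str, List[ChatMessage]], str]) -> None:
        self._generate = generate
        self._store: Dict[str, str] = {}
        self.hits = 0

    def get(self, deployment: str, messages: List[ChatMessage]) -> str:
        key = hashlib.sha256(json.dumps([deployment, messages], sort_keys=True).encode()).hexdigest()
        if key in self._store:
            self.hits += 1
        else:
            self._store[key] = self._generate(deployment, messages)
        return self._store[key]
```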

5. Establish content filtering policies

Configure Azure OpenAI's content filters based on your risk tolerance. Financial services organizations typically use high sensitivity, which in Azure terms means a low severity threshold that blocks content rated low, medium, or high severity. Creative agencies may use lower sensitivity, blocking only high-severity content, to avoid over-filtering.

Test filter configurations against your actual use cases. Filters that work for customer service chatbots may block legitimate content in creative writing applications. Adjust sensitivity settings based on measured false positive rates.
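Measuring false positives requires a labeled sample of your own traffic. The sketch below assumes you have collected two things for each sample:

- The severity the filter assigned. Azure reports the levels safe, low, medium, and high.
- A reviewer's judgment of whether the content was legitimate.

The code mirrors the Azure threshold semantics, where a threshold of low blocks the most content and high blocks the least. It is an offline analysis aid with hypothetical names, not the Azure configuration API.

```python
from typing import List, Tuple

SEVERITY_RANK = {"safe": 0, "low": 1, "medium": 2, "high": 3}
THRESHOLDS = ("low", "medium", "high")  # strictest first


def is_blocked(severity: str, threshold: str) -> bool:
    """Content is blocked when its severity is at or above the configured threshold."""
    if threshold not in THRESHOLDS:
        raise ValueError(f"threshold must be one of {THRESHOLDS}")
    return SEVERITY_RANK[severity] >= SEVERITY_RANK[threshold]


def false_positive_rate(samples: List[Tuple[str, bool]], threshold: str) -> float:
    """Share of reviewer-approved (legitimate) samples that the threshold would block."""
    legitimate = [severity for severity, ok in samples if ok]
    if not legitimate:
        return 0.0
    return sum(is_blocked(s, threshold) for s in legitimate) / len(legitimate)


def strictest_acceptable(samples: List[Tuple[str, bool]], max_fpr: float) -> str:
    """Strictest threshold within max_fpr; falls back to the least strict one."""
    for threshold in THRESHOLDS:
        if false_positive_rate(samples, threshold) <= max_fpr:
            return threshold
    return THRESHOLDS[-1]


labeled = [("safe", True), ("low", True), ("medium", True), ("high", False), ("low", True)]
for t in THRESHOLDS:
    print(t, false_positive_rate(labeled, t))
print(strictest_acceptable(labeled, max_fpr=0.3))  # → medium
```

A fuller analysis would also track how much harmful content each threshold lets through. Loosening the threshold trades missed detections for fewer false positives.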

6. Plan for model version transitions

OpenAI releases model updates frequently. Establish processes for testing new versions before promoting to production. Maintain parallel deployments of current and candidate versions. Run A/B tests that measure quality, latency, and cost differences.

Organizations should allocate 10-15% of their AI engineering time to model version testing and migration. This prevents technical debt accumulation when deprecated models reach end-of-life.
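The A/B step can be sketched in two pieces. The first is a deterministic split that sends a fixed share of users to the candidate deployment. The second compares per-request metrics. The metric names, the 0 to 1 quality score from your evaluator, and the promotion limits are hypothetical. Real decisions should also check that differences are statistically meaningful, rather than relying on raw means as this sketch does.

```python
import hashlib
from statistics import mean
from typing import Dict, List

METRICS = ("quality", "latency_ms", "cost_usd")


def assign_variant(user_id: str, candidate_share: float) -> str:
    """Hash the user ID so each user consistently sees the same deployment."""
    bucket = int(hashlib.sha256(user_id.encode()).hexdigest(), 16) % 10_000
    return "candidate" if bucket < candidate_share * 10_000 else "current"


def metric_deltas(current: List[Dict[str, float]], candidate: List[Dict[str, float]]) -> Dict[str, float]:
    """Candidate mean minus current mean for each metric."""
    return {m: mean(r[m] for r in candidate) - mean(r[m] for r in current) for m in METRICS}


def should_promote(
    deltas: Dict[str, float],
    min_quality_gain: float = 0.0,
    max_latency_increase_ms: float = 100.0,
    max_cost_increase_usd: float = 0.0,
) -> bool:
    return (
        deltas["quality"] >= min_quality_gain
        and deltas["latency_ms"] <= max_latency_increase_ms
        and deltas["cost_usd"] <= max_cost_increase_usd
    )


current = [{"quality": 0.80, "latency_ms": 500, "cost_usd": 0.010}] * 2
candidate = [{"quality": 0.90, "latency_ms": 550, "cost_usd": 0.010}] * 2
deltas = metric_deltas(current, candidate)
print(assign_variant("user-42", 0.10), deltas, should_promote(deltas))
```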

7. Implement responsible AI governance

Assign clear ownership for AI system behavior. Document decision criteria for when AI recommendations require human review. Establish processes for investigating AI-generated errors and implementing corrective actions.

Create feedback loops where users can flag problematic AI outputs. Monitor these flags for patterns that indicate systematic issues. Organizations with mature AI governance review flagged outputs weekly and update prompts or filters based on observed failures.
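Both governance practices can start as very small pieces of code. In this sketch, the review criteria are a documented table of hypothetical use cases, and unknown use cases default to human review. User flags are grouped by prompt template and reason, so repeated failures surface as systematic issues in the weekly review. The use-case names, reason labels, and three-flag threshold are placeholders.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, List, Tuple

# Documented decision criteria: which AI outputs always require human sign-off.
REVIEW_REQUIRED = {
    "credit_decision": True,
    "claims_denial": True,
    "internal_faq": False,
}


def requires_human_review(use_case: str) -> bool:
    return REVIEW_REQUIRED.get(use_case, True)  # fail safe for unlisted use cases


@dataclass(frozen=True)
class Flag:
    prompt_template: str
    reason: str  # e.g. "factual_error", "outdated_policy", "tone"


def systematic_issues(flags: Iterable[Flag], min_count: int = 3) -> List[Tuple[Tuple[str, str], int]]:
    """(template, reason) pairs flagged at least min_count times, most frequent first."""
    counts = Counter((f.prompt_template, f.reason) for f in flags)
    return [(key, n) for key, n in counts.most_common() if n >= min_count]


week = [Flag("faq-v3", "outdated_policy")] * 3 + [Flag("summary-v1", "tone")]
print(systematic_issues(week))  # → [(('faq-v3', 'outdated_policy'), 3)]
```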

Kellton: Your Azure OpenAI implementation partner

Kellton accelerates Azure OpenAI adoption through proven implementation frameworks developed across 50+ enterprise deployments. Our team combines Azure architecture expertise with deep knowledge of OpenAI model capabilities to deliver production-ready AI systems. Contact Kellton to assess your Azure OpenAI readiness, identify high-ROI use cases, and accelerate deployment timelines. We'll provide a customized implementation roadmap based on your industry, existing Azure footprint, and business objectives.

Frequently asked questions

What is Azure OpenAI?

Azure OpenAI is Microsoft's enterprise service that provides API access to OpenAI's language models through Azure cloud infrastructure with enterprise security, compliance certifications, and integration with Azure services.

Is Azure OpenAI the same as ChatGPT?

No. ChatGPT is OpenAI's consumer web interface. Azure OpenAI provides API access to the underlying models with enterprise controls, data isolation, and integration capabilities for production applications.

What are the methods of Azure OpenAI?

Azure OpenAI supports chat completions and the Responses API for text generation, embeddings for semantic search, fine-tuning for domain adaptation, and image generation models such as DALL-E and GPT-image. All are accessed through REST APIs or SDKs for Python and other languages.

What is new in Azure OpenAI?

Recent updates include GPT-4.1 with 1 million token context, o4-mini and o3 reasoning models, GPT-5-chat with enhanced emotional intelligence, unified v1 API, and Microsoft Foundry access to 11,000+ models.

Why use Azure OpenAI instead of OpenAI?

Azure OpenAI provides enterprise security, data residency guarantees, compliance certifications, provisioned throughput for cost control, version stability, and native Azure ecosystem integration that direct OpenAI API lacks.

How does Azure OpenAI bring enterprise-grade AI to the cloud?

Azure OpenAI combines OpenAI's models with Azure's enterprise infrastructure, providing FedRAMP and HIPAA compliance, private network connectivity, role-based access control, audit logging, and 99.9% uptime SLAs.

What is Azure OpenAI vs OpenAI API?

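Azure OpenAI runs as a resource in your Azure subscription, with regional data processing options, private networking, and Azure identity controls. The OpenAI API is OpenAI's public, multi-tenant service. Neither service uses customer API data to train models by default, so the main differences are data location, network isolation, and enterprise governance.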

What are the core features of Azure OpenAI?

Core features include provisioned throughput for guaranteed capacity, content filtering for responsible AI, deployment-based version control, Microsoft Entra ID (formerly Azure AD) authentication, private network access, regional deployment options, and multi-model support through Foundry.